Getting Started With Analytics 2.0


Table of Contents

- Procore Analytics Cloud Connector

- Begin Setup

- Choose A Data Connection Method

- Connect to Power BI Desktop

- Connect to SQL Server Using Python (SSIS)

- Connect to SQL Server Using Python Library

- Connect to SQL Server Using Python Spark

- Connect to ADLS Using Azure Functions

- Connect to ADLS Using Python

- Connect to ADLS Using Spark

- Connect to Fabric Lakehouse Using Data Factory

- Connect to Fabric Lakehouse Using Fabric Notebooks

- Connect to SQL Server Using Azure Functions

- Connect to SQL Server Using Data Factory

- Connect to SQL Server Using Fabric Notebook

- Connect to Databricks

- Connect to Snowflake Using Python

- Connect to Amazon S3 Using Python

- Build Your Own Connection

- Connect to BigQuery

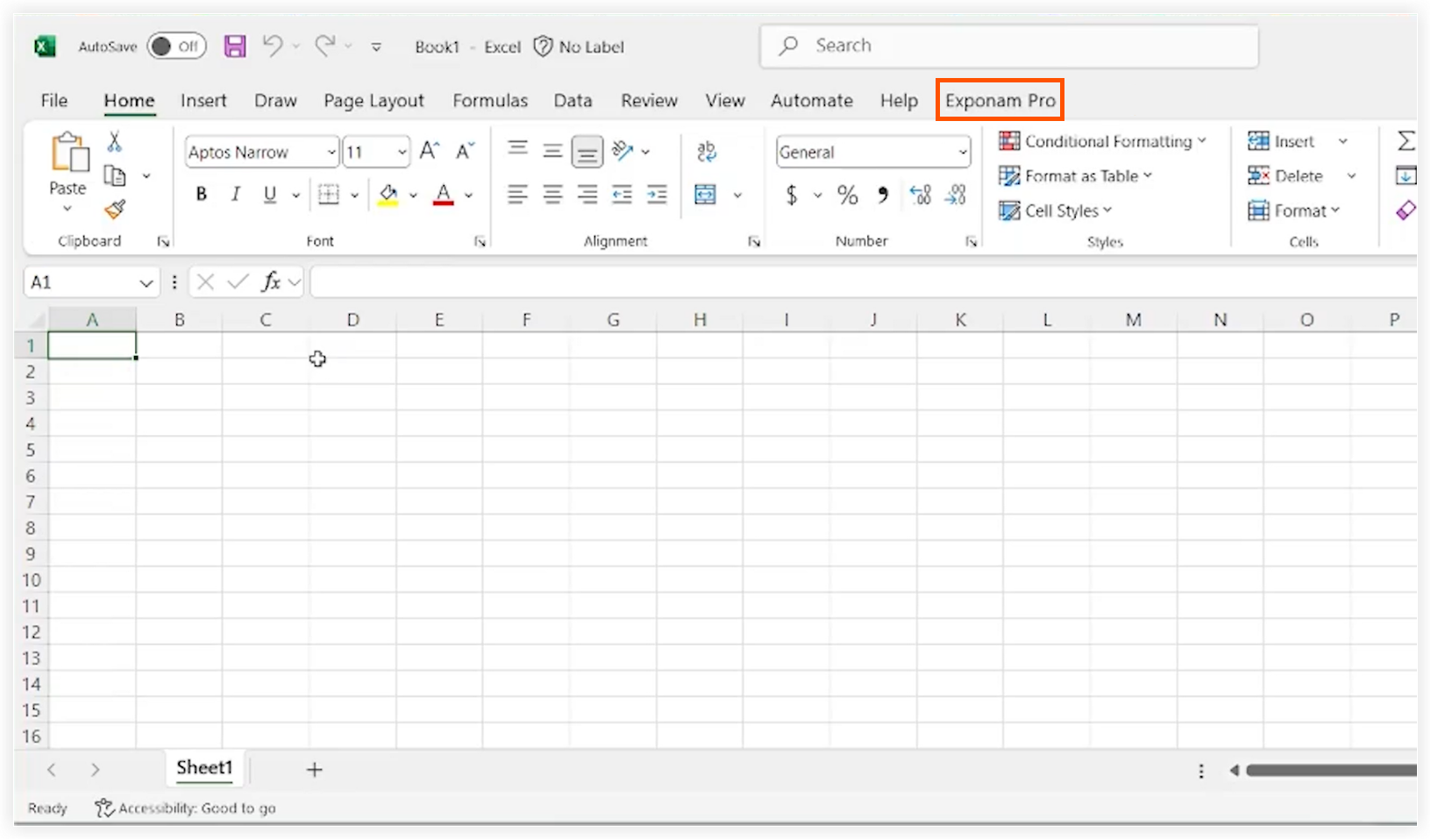

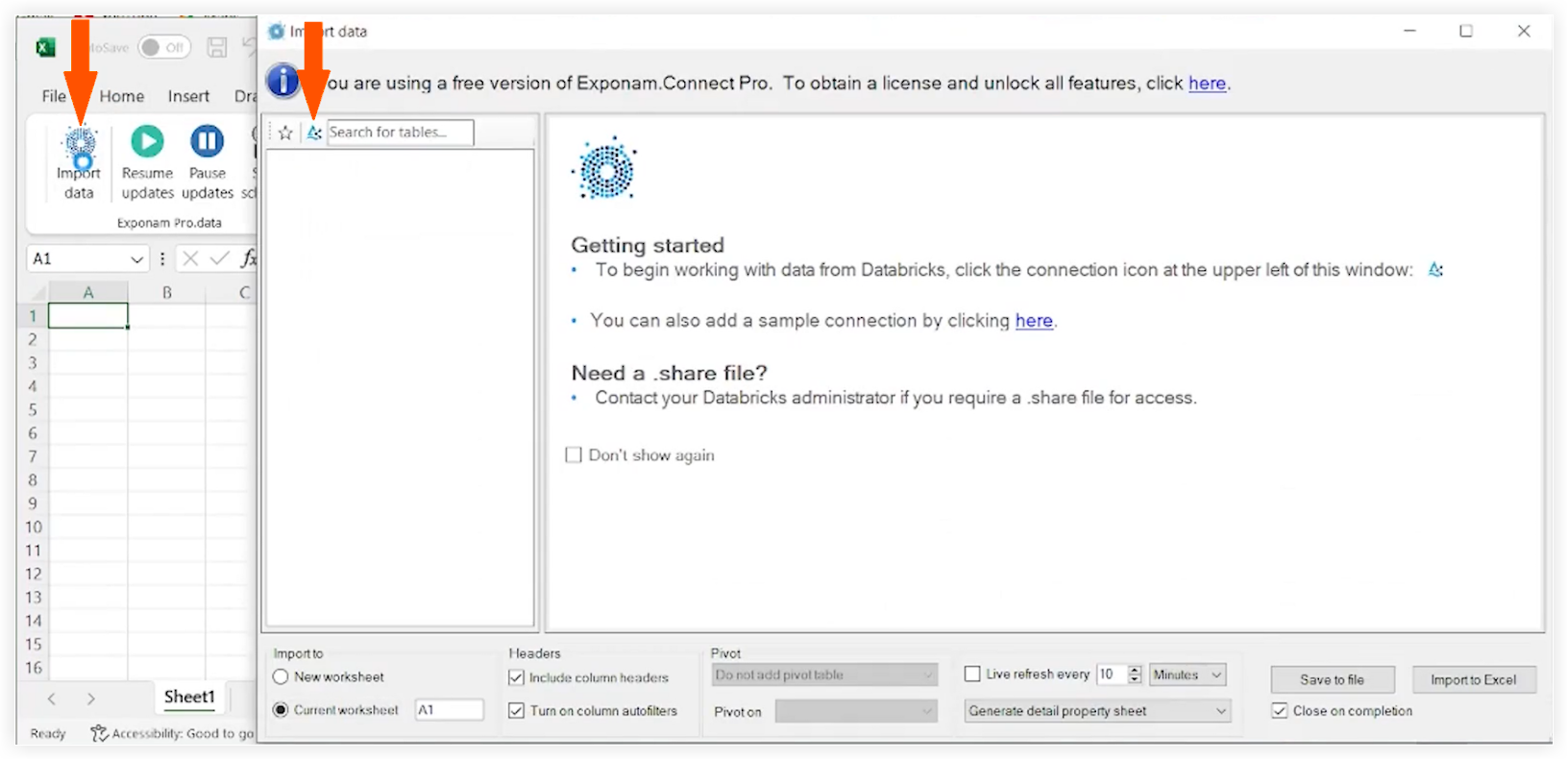

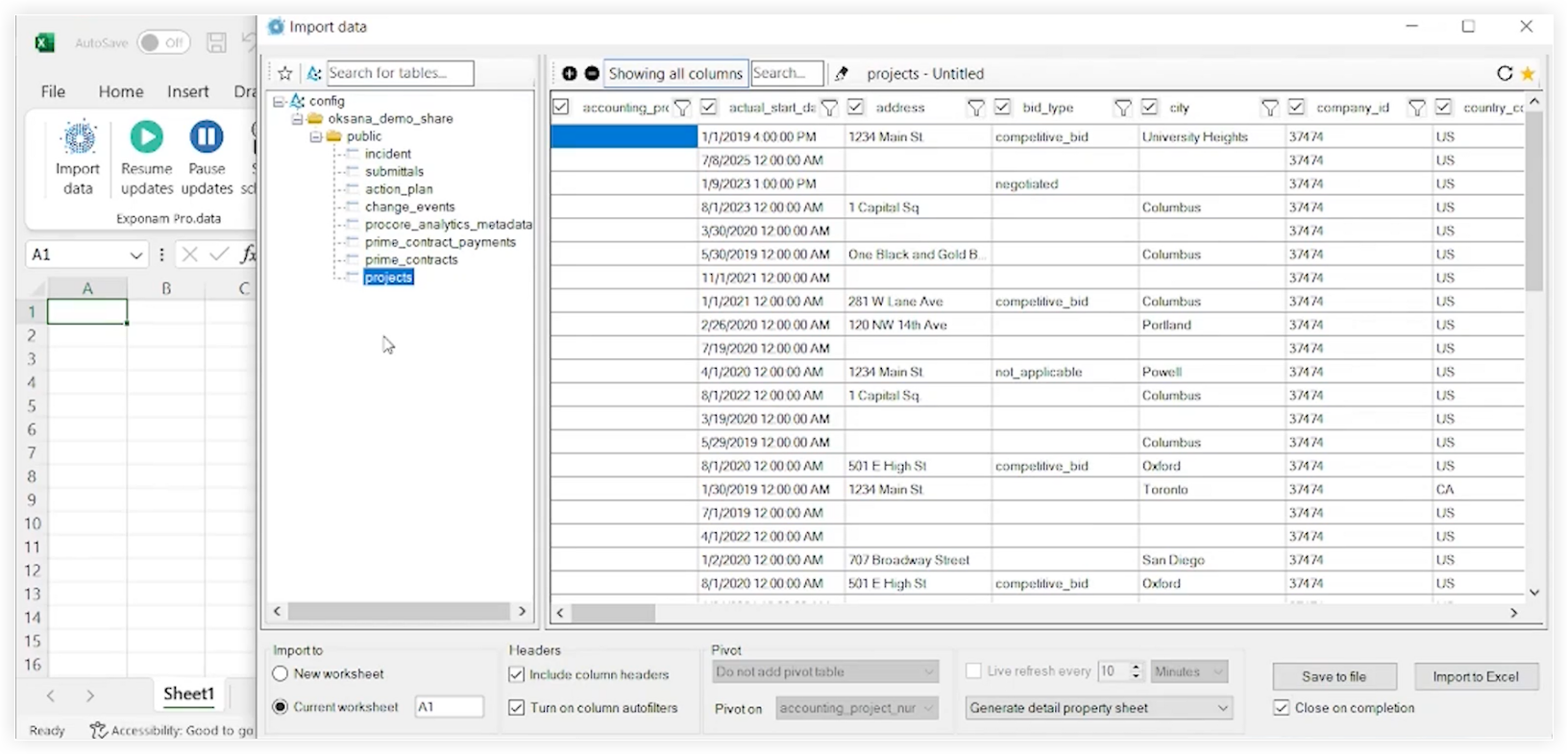

- Connect to Microsoft Excel Using Exponam

Procore Analytics Cloud Connector

Introduction

Cloud Connector is how Procore shares data, intelligence, and analytics with our customers. Data can be shared directly with reporting tools such as Power BI or Tableau, or with customers' data warehouses, data stores, data lakes, or other applications. Customers can even build programmatic access to their data using Cloud Connector for true automation. Cloud Connector is based on the open Delta Sharing protocol.

Analytics 2.0 Guided Data Connector Options

Delta Sharing is the industry’s first open protocol for secure data sharing, making it simple to share data with other organizations regardless of which computing platforms they use. Many applications can read data over Delta Sharing. To further enhance the customer experience, Procore has added connectors, prebuilt code, and guides for the following platforms, reducing setup time and complexity to enable a seamless, out-of-the-box connection.

- SQL Server

  - SSIS Python

  - Python Library

  - Python Spark

- Azure

  - ADLS Azure Functions

  - ADLS Python

  - ADLS Spark

  - Lakehouse Fabric Data Factory

  - Lakehouse Fabric Notebook

  - SQL Server Azure Functions

  - SQL Server Fabric Data Factory

  - SQL Server Fabric Notebook

- Databricks

- Snowflake

- Amazon S3

- Guides to Write Your Own (GitHub)

More data connectors coming soon!
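Because Cloud Connector uses the open Delta Sharing protocol, any Delta Sharing client can read your data, not only the prebuilt connectors listed above. The following is a minimal sketch, assuming the open-source delta-sharing Python package (pip install delta-sharing) and a profile file named config.share. The share, schema, and table names are placeholders; list_tables() returns the names that are actually available to your token.

```python
def table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Build the '<profile>#<share>.<schema>.<table>' address used by delta-sharing."""
    return f"{profile_path}#{share}.{schema}.{table}"


def list_tables(profile_path: str) -> list:
    """Return 'share.schema.table' names for every table visible to the token."""
    import delta_sharing  # imported lazily so table_url() works without the package

    client = delta_sharing.SharingClient(profile_path)
    return [f"{t.share}.{t.schema}.{t.name}" for t in client.list_all_tables()]


def load_table(profile_path: str, share: str, schema: str, table: str):
    """Download one shared table into a pandas DataFrame."""
    import delta_sharing

    return delta_sharing.load_as_pandas(table_url(profile_path, share, schema, table))
```

For example, load_table("config.share", "<share>", "<schema>", "<table>") returns a pandas DataFrame. The table_url() helper only assembles the profile#share.schema.table address that the delta-sharing package expects.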

Access Documentation and Code

Comprehensive documentation and code examples are available directly in the Analytics tool of the Procore web application, which your Procore admins can access. These resources provide step-by-step instructions, code snippets, and best practices to help you set up and manage your data integration effectively.

Next Steps

Continue to the next section of this guide to begin the setup process.

For additional inquiries or assistance, please contact your account manager or our support team.

Partner Considerations

Please review the following partner considerations:

Consulting Partners

- Permissions: When using Analytics 2.0, the token you generate will pull data from all companies that your Procore login has access to. If you work with multiple customers, you need to create a separate Procore login for each customer to avoid inadvertently sharing data across customers. Do not use the same login (token) for different customers.

- Support: The partner must provide support related to their datasets and reports.

App Partners

- Bulk access: Whether you expose your data via API or a Power BI custom connector, you’ll need a security model that returns data across all projects with each endpoint. Power BI is geared towards bulk data ingestion and will not scale if it has to iterate over datasets by project or another unique identifier.

- Multiple datasets: It's common to expose separate datasets for each reportable data entity. Each of these should support the same security model and return data in bulk.

- Security: You’ll need to implement a solution that enables bulk reading of the data with a single set of secure credentials.

- Power BI template: Your Power BI template should work out of the box with any customer who uses it. The Power BI template is loaded into the client’s Power BI tenant. They will configure the datasets with the credentials you provide them.

Verify Permissions

Note

- You must have the Analytics tool enabled at the Company level for your company's Procore account.

- Anyone with 'Admin' level access to the Analytics tool can grant additional users access to the Analytics tool.

- Users must have 'Admin' level access to the Analytics tool to generate an access token.

- Changes to a user's Analytics permissions in the Directory can take up to 24 hours to take effect.

Make sure the appropriate permissions are assigned so that you can generate an access token and begin connecting your Procore data to your BI solution. Access to Analytics is linked to your Procore login credentials, which allows you to generate a single access token. The access token is a string of characters that you enter in your BI system to access data.

Typically, users who need access tokens are data engineers or Power BI developers. If you have access to Analytics in several companies, your token will allow you to pull data from all of them. The token is tied to you, not to a specific company, so it remains the same across all companies you have access to.

Company and Project Admins will be granted an Admin role by default. The following user access levels are permitted for the Analytics tool:

- None: No access to Analytics data.

- Admin: Has full access permissions to data for all tools and projects (except certain data marked as private such as correspondence data).

There are two ways to assign permissions to individual users:

Revoking Access

Access to data in the Analytics tool will be revoked when a user's permissions for the tool are removed. Additionally, if a user’s contact record becomes inactive, they will also lose access to Analytics data.

Generate Data Access Credentials

To start accessing your Procore data, you have two options for generating your data access credentials: the Databricks direct connection method or the Delta Share Token method. The access token is a string of characters that you enter in your data connector to access data.

Considerations

- You must have the Analytics tool enabled at the Company level for your company's Procore account.

- By default, all Company Admins have 'Admin' level access to Analytics in the Directory.

- Anyone with 'Admin' level access to the Analytics tool can grant additional users access to the Analytics tool.

- Users must have 'Admin' level access to the Analytics tool to generate an access token.

Steps

- Log in to Procore.

- Click the Account & Profile icon in the top-right area of the navigation bar.

- Click My Profile Settings.

- Under Choose Your Connection with Analytics, you have two options to generate credentials:

- Databricks direct connect OR generate a personal access token with Delta Share.

- Enter your Databricks Sharing Identifier for the Databricks direct connection method, then click Connect. See Connect Your Procore Data to a Databricks Workspace to learn more.

- For the token method, select Delta Share Token.

- Make sure to choose an expiration date.

- Click Generate Tokens.

Important! It's recommended that you copy and store your token for future reference, since Procore does not store tokens for users.

- You will use your Bearer Token, Share Name, Delta Sharing server URL, and Share Credentials Version to begin accessing and integrating your data.

- Explore the additional sections of the Getting Started Guide for next steps on connecting your data based on your desired data connection method.
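The bearer token, Delta Sharing server URL, and share credentials version are the three values that go into a Delta Sharing profile file (config.share); the share name is used later to address tables and is not stored in the profile. A minimal sketch that assembles the profile, assuming the JSON layout shown in the config.share examples in this guide; build_profile() and save_profile() are hypothetical helper names.

```python
import json
from pathlib import Path


def build_profile(bearer_token: str, endpoint: str, version: int = 1) -> str:
    """Return the JSON text of a Delta Sharing profile file."""
    profile = {
        "shareCredentialsVersion": version,  # Share Credentials Version
        "bearerToken": bearer_token,         # Bearer Token from the token page
        "endpoint": endpoint,                # Delta Sharing server URL
    }
    return json.dumps(profile, indent=2)


def save_profile(path: str, bearer_token: str, endpoint: str) -> Path:
    """Write config.share and restrict it to the current user where the OS allows."""
    out = Path(path)
    out.write_text(build_profile(bearer_token, endpoint), encoding="utf-8")
    out.chmod(0o600)  # on Windows this only controls the read-only flag
    return out
```

For example, save_profile("config.share", "<your bearer token>", "<your endpoint>") writes the file. Keep it out of shared folders and version control, since the token can pull data from every company your login has access to.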

Note

- The token disappears after one hour, or as soon as you navigate away from the page. To generate a new token, return to Step 1.

- It may take up to 24 hours for the data to become visible.

- Do not regenerate your token during this processing time, as doing so may cause issues with your token.

Upload Reports to Power BI (If Applicable)

- Navigate to Analytics from your Company Tools menu.

- Go to the Getting Started section.

- Under Power BI Files, select and download the available Power BI reports.

- Log in to the Power BI service using your Power BI login credentials.

- Create a workspace where you want to store your company's Analytics reports. See Microsoft's Power BI support documentation for more information.

Note: Licensing requirements may apply.

- In the workspace, click Upload.

- Now click Browse.

- Select the report file from its location on your computer and click Open.

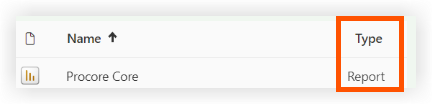

- After uploading the file, click Filter and select Semantic Model.

- Hover your cursor over the row with the report's name and click the vertical ellipsis icon.

- Click Settings.

- On the settings page, click Data source credentials and then click Edit Credentials.

- In the 'Configure [Report Name]' window that appears, complete the following:

- Authentication Method: Select 'Key'.

- Account Key: Enter the token you received from the token generation page in Procore.

- Privacy level setting for this data source: Select the privacy level. We recommend selecting 'Private' or 'Organizational'. See Microsoft's Power BI support documentation for more information about the privacy levels.

- Click Sign in.

- Click Refresh and do the following:

- Time zone: Select the time zone you want to use for scheduled data refreshes.

- Under Configure a refresh schedule, turn the toggle to the ON position.

- Refresh frequency: Select 'Daily'.

- Time: Click Add another time and select 7:00 a.m.

Note: You may add up to 8 refresh times.

- Optional:

- Mark the 'Send refresh failure notifications to the dataset owner' checkbox to send refresh failure notifications.

- Enter the email addresses of any other colleagues you want the system to send refresh failure notifications to.

- Click Apply.

- To verify that the settings were configured correctly and that the report's data will refresh properly, return to the 'Filter and select Semantic Model' page and complete the following steps:

- Hover your cursor over the row with the report's name and click the circular arrow icon to refresh the data manually.

- Check the 'Refreshed' column to see if there is a warning icon.

- If no warning icon displays, the report's data is successfully refreshed.

- If a warning icon displays, an error has occurred. Click the warning icon to see more information about the error.

- To delete the blank dashboard the Power BI service created automatically, complete the following steps:

- Hover your cursor over the row with the dashboard's name. Click the ellipsis icon and click Delete.

- To verify that the report renders properly, navigate to the 'All' or 'Content' page and click on the report's name to view the report in the Power BI service.

Tip

Reference the 'Type' column to ensure you click on the report instead of a different asset.

- Repeat the steps above within Power BI for each Analytics report file.

Connect to Power BI Desktop

- Download the zipped package from the company-level Analytics tool in Procore (via Analytics > Getting Started > Connection Options > PowerBI).

Connect to SQL Server Using Python (SSIS)

Overview

The Analytics Cloud Connect Access tool is a command-line interface (CLI) that helps you configure and manage data transfers from Procore to MS SQL Server. It consists of two main components:

- user_exp.py (Configuration setup utility)

- delta_share_to_azure_panda.py (Data synchronization script)

Prerequisites

- Python and pip installed on your system.

- Access to Procore Delta Share.

- MS SQL Server account credentials.

- Download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > SQL Server).

- Install required dependencies: pip install -r requirements.txt.

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

Steps

- Initial Configuration

- Data Synchronization

- Delta Share Configuration

- MS SQL Server Configuration

- SSIS Configuration

Initial Configuration

- Run the configuration utility:

python user_exp.py

This will help you set up the following:

- Delta Share source configuration

- MS SQL Server target configuration

- Scheduling preferences

Data Synchronization

After configuration, you have two options to run the data sync:

- Direct Execution:

python delta_share_to_azure_panda.py

OR

- Scheduled Execution:

If configured during setup, the job will run automatically according to your cron schedule.

Delta Share Configuration

- Create a new file named config.share with your Delta Share credentials in JSON format.

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "https://nvirginia.cloud.databricks.c...astores/xxxxxx"
}
```

- Get the required fields:

Note: These details can be obtained from the Analytics web application.

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- Save the file in a secure location.
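Before pointing the configuration utility at config.share, it can help to confirm that the file parses and contains the three fields listed above. A minimal, hypothetical check that uses only the Python standard library; it is not part of the Procore package.

```python
import json

REQUIRED_KEYS = ("shareCredentialsVersion", "bearerToken", "endpoint")


def check_profile(text: str) -> list:
    """Return a list of problems found in config.share content (empty if none)."""
    try:
        profile = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(profile, dict):
        return ["top level must be a JSON object"]
    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if key not in profile]
    if profile.get("shareCredentialsVersion", 1) != 1:
        problems.append("shareCredentialsVersion should be 1")
    endpoint = profile.get("endpoint", "")
    if endpoint and not str(endpoint).startswith("https://"):
        problems.append("endpoint should be an https:// URL")
    return problems
```

Pass it the text of config.share; an empty list means none of these checks failed.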

- When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated).

- Leave blank to sync all tables.

- Example: `table1, table2, table3`.

- Path to your `config.share` file.
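The table prompt follows a simple rule: names are separated by commas, surrounding spaces are ignored, and a blank answer means all tables. A hypothetical helper that applies the same rule, useful if you keep the table list in your own scripts; the package's own parsing may differ in details.

```python
from typing import List, Optional


def parse_table_list(raw: str) -> Optional[List[str]]:
    """Split a comma-separated answer into table names; None means sync all tables."""
    names = [part.strip() for part in raw.split(",")]
    names = [name for name in names if name]  # drop empty entries, e.g. a trailing comma
    return names or None
```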

MS SQL Server Configuration

You'll need to provide the following MS SQL Server details:

- database

- host

- password

- schema

- username
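If you also query the target database from your own Python code, these values can be combined into a SQLAlchemy connection URL. This is a sketch under two assumptions: that the pyodbc driver is used, and that Microsoft's ODBC Driver 18 for SQL Server is installed (change driver to match your installation). Special characters in the user name, password, or database name are URL-encoded. The schema is not part of the URL; it is passed separately, for example as the schema argument of pandas DataFrame.to_sql().

```python
from urllib.parse import quote_plus


def sqlserver_url(username: str, password: str, host: str, database: str,
                  driver: str = "ODBC Driver 18 for SQL Server") -> str:
    """Build an mssql+pyodbc SQLAlchemy URL; credentials are URL-encoded."""
    return (
        f"mssql+pyodbc://{quote_plus(username)}:{quote_plus(password)}"
        f"@{host}/{quote_plus(database)}"
        f"?driver={quote_plus(driver)}"
    )
```

Pass the result to sqlalchemy.create_engine() to open connections to the same database the sync script writes to.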

SSIS Configuration

- Using the command line, navigate to the folder by entering 'cd <path to the folder>'.

- Install required packages using 'pip install -r requirements.txt' or 'python -m pip install -r requirements.txt'.

- Open SSIS and create a new project.

- From the SSIS Toolbox, drag and drop an 'Execute Process Task' activity.

- Double-click 'Execute Process Task' and navigate to the Process tab.

- In 'Executable', enter the path to python.exe in your Python installation folder.

- In 'WorkingDirectory', enter the path to the folder containing the script you want to execute (without the script file name).

- In 'Arguments', enter the name of the script to execute, including the .py extension (delta_share_to_azure_panda.py), and save.

- Click the Start button in the upper pane.

- While the task runs, the Python console output is displayed in an external console window.

- When the task finishes, a green check mark is displayed.

Connect to SQL Server Using Python Library

Overview

This guide provides detailed instructions for setting up and using the Delta Sharing integration package on a Windows operating system to seamlessly integrate data into your workflows with Analytics. The package supports multiple execution options, allowing you to choose your desired configuration and integration method.

Prerequisites

Ensure you have the following before proceeding:

- Analytics 2.0 SKU

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

Steps

- Prepare the Package

- Install Dependencies

- Generate Configuration

- Configure Cron Jobs and Immediate Execution

- Execution and Maintenance

Prepare the Package

- Create a new file named config.share with your Delta Share credentials in JSON format.

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "https://nvirginia.cloud.databricks.c...astores/xxxxxx"
}
```

- Get the required fields:

Note: These details can be obtained from the Analytics web application.

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- Download and extract the package.

Note: You can download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > SQL Server).

- Unzip the package to a directory of your choice.

- Copy the *.share Delta Sharing profile file into the package directory for easy access.

Install Dependencies

- Open a terminal in the package directory.

- Run the following command to install the dependencies:

- pip install -r requirements.txt

Generate Configuration

- Generate the config.yaml file by running python user_exp.py:

This script generates the config.yaml file, which contains the necessary credentials and settings.

- When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated).

- Leave blank to sync all tables.

- Example: `table1, table2, table3`.

- Path to your `config.share` file.

- The first time you run the script, you provide your credentials and settings, such as the Delta Share source configuration location, tables, database, and host.

Note: Afterwards, you can reuse the configuration, update it manually, or run python user_exp.py again.

Configure Cron Jobs and Immediate Execution (Optional)

- Decide whether to set up a cron job for automatic execution.

- Provide a cron schedule:

- Format: * * * * * (minute, hour, day-of-month, month, day-of-week).

- Example for daily execution at 2 AM: 0 2 * * *

- To check scheduling logs, review the procore_scheduling.log file, which is created as soon as scheduling is set up.

You can also check scheduling from a terminal:

For Linux and macOS:

To edit or delete the scheduled cron job, run:

```bash
EDITOR=nano crontab -e
```

- After running the command above, you should see something similar to:

- 2 * * * * /Users/your_user/snowflake/venv/bin/python /Users/your_user/snowflake/sql_server_python/connection_config.py 2>&1 | while read line; do echo "$(date) - $line"; done >> /Users/your_user/snowflake/sql_server_python/procore_scheduling.log # procore-data-import

- You can also adjust the cron schedule, or delete the whole line to stop the scheduled runs.
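A five-field cron expression reads, left to right: minute, hour, day of month, month, day of week. Splitting it into named fields is a quick way to check a schedule before saving it. For instance, the sample crontab line above begins with 2 * * * *, which runs at minute 2 of every hour, whereas the daily 2 AM schedule from the earlier example is 0 2 * * *. A minimal, hypothetical helper:

```python
from typing import Dict

CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expr: str) -> Dict[str, str]:
    """Map each field of a standard five-field cron expression to its name."""
    parts = expr.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected 5 fields, got {len(parts)}: {expr!r}")
    return dict(zip(CRON_FIELDS, parts))
```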

For Windows:

- Check that the scheduled task was created:

```powershell
schtasks /query /tn "ProcoreDeltaShareScheduling" /fo LIST /v
```

- To edit or delete the scheduled task, open the Task Scheduler:

- Press Win + R, type taskschd.msc, and press Enter.

- Navigate to the scheduled tasks.

- In the left pane, expand the Task Scheduler Library.

- Look for the folder where your task is saved (e.g., Task Scheduler Library or a custom folder).

- Find your task:

- Look for the task name ProcoreDeltaShareScheduling.

- Click on it to view its details in the bottom pane.

- Verify its schedule:

- Check the Triggers tab to see when the task is set to run.

- Check the History tab to confirm recent runs.

- To delete the task:

- Delete the task from the Task Scheduler GUI.

Immediate Execution:

- The configuration script offers the option to run the data copy script immediately after configuration.

- After config.yaml has been generated, you can run the data copy script independently at any time. The script name depends on your package, for example:

python delta_share_to_azure_panda.py

OR

python delta_share_to_sql_spark.py

OR

python delta_share_to_azure_dfs_spark.py

Execution and Maintenance

Common Issues and Solutions

- Cron Job Setup:

- Ensure system permissions are correctly configured.

- Check system logs if the job fails to run.

- Verify the script delta_share_to_azure_panda.py has execute permissions.

- Configuration File:

- Ensure the file config.yaml is in the same directory as the script.

- Back up the file before making changes.

Support

For additional help:

- Review script logs for detailed error messages.

- Double-check the config.yaml file for misconfigurations.

- Contact your system administrator for permission-related issues.

- Reach out to Procore support for issues related to Delta Share access.

- Review the failed_tables.log file for tables that failed to sync.
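A separate failed-tables log suggests that one failing table does not stop the whole run. A minimal sketch of that pattern, assuming a caller-supplied sync_one function that copies a single table and raises on error; the tab-separated log format here is an assumption, and the packaged scripts may write failed_tables.log differently.

```python
from typing import Callable, Iterable, List, Optional


def sync_tables(tables: Iterable[str], sync_one: Callable[[str], None],
                log_path: Optional[str] = "failed_tables.log") -> List[str]:
    """Sync each table independently and return the names of tables that failed."""
    failed: List[str] = []
    for table in tables:
        try:
            sync_one(table)
        except Exception as exc:  # record the failure and continue with the next table
            failed.append(table)
            if log_path is not None:
                with open(log_path, "a", encoding="utf-8") as log:
                    log.write(f"{table}\t{exc}\n")
    return failed
```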

Notes

- Always back up your configuration files before making changes.

- Test new configurations in a non-production environment to prevent disruptions.

Connect to SQL Server Using Python Spark

Overview

This guide provides detailed instructions for setting up and using the Delta Sharing integration package on a Windows operating system to seamlessly integrate data into your workflows with Analytics. The package supports multiple execution options, allowing you to choose your desired configuration and integration method.

Prerequisites

Ensure you have the following before proceeding:

- Analytics 2.0 SKU

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

Steps

- Prepare the Package

- Install Dependencies

- Generate Configuration

- Configure Cron Jobs and Immediate Execution

- Execution and Maintenance

Prepare the Package

- Create a new file named config.share with your Delta Share credentials in JSON format.

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "https://nvirginia.cloud.databricks.c...astores/xxxxxx"
}
```

- Get the required fields:

Note: These details can be obtained from the Analytics web application.

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- Download and extract the package.

Note: You can download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > SQL Server).

- Unzip the package to a directory of your choice.

- Copy the *.share Delta Sharing profile file into the package directory for easy access.

Install Dependencies

- Open a terminal in the package directory.

- Run the following command to install the dependencies:

- pip install -r requirements.txt

Generate Configuration

- Generate the config.yaml file by running python user_exp.py:

This script generates the config.yaml file, which contains the necessary credentials and settings.

- When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated).

- Leave blank to sync all tables.

- Example: `table1, table2, table3`.

- Path to your `config.share` file.

- The first time you run the script, you provide your credentials and settings, such as the Delta Share source configuration location, tables, database, and host.

Note: Afterwards, you can reuse the configuration, update it manually, or run python user_exp.py again.

Configure Cron Jobs and Immediate Execution (Optional)

- Decide whether to set up a cron job for automatic execution.

- Provide a cron schedule:

- Format: * * * * * (minute, hour, day-of-month, month, day-of-week).

- Example for daily execution at 2 AM: 0 2 * * *

- To check scheduling logs, review the procore_scheduling.log file, which is created as soon as scheduling is set up.

You can also check scheduling from a terminal:

For Linux and macOS:

To edit or delete the scheduled cron job, run:

```bash
EDITOR=nano crontab -e
```

- After running the command above, you should see something similar to:

- 2 * * * * /Users/your_user/snowflake/venv/bin/python /Users/your_user/snowflake/sql_server_python/connection_config.py 2>&1 | while read line; do echo "$(date) - $line"; done >> /Users/your_user/snowflake/sql_server_python/procore_scheduling.log # procore-data-import

- You can also adjust the cron schedule, or delete the whole line to stop the scheduled runs.

For Windows:

- Check that the scheduled task was created:

```powershell
schtasks /query /tn "ProcoreDeltaShareScheduling" /fo LIST /v
```

- To edit or delete the scheduled task, open the Task Scheduler:

- Press Win + R, type taskschd.msc, and press Enter.

- Navigate to the scheduled tasks.

- In the left pane, expand the Task Scheduler Library.

- Look for the folder where your task is saved (e.g., Task Scheduler Library or a custom folder).

- Find your task:

- Look for the task name ProcoreDeltaShareScheduling.

- Click on it to view its details in the bottom pane.

- Verify its schedule:

- Check the Triggers tab to see when the task is set to run.

- Check the History tab to confirm recent runs.

- To delete the task:

- Delete the task from the Task Scheduler GUI.

Immediate Execution:

- The configuration script offers the option to run the data copy script immediately after configuration.

- After config.yaml has been generated, you can run the data copy script independently at any time. The script name depends on your package, for example:

python delta_share_to_azure_panda.py

OR

python delta_share_to_sql_spark.py

OR

python delta_share_to_azure_dfs_spark.py
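For orientation, a Spark-based copy to SQL Server generally has two parts: reading the shared table and writing it through Spark's JDBC data source. The sketch below assumes the delta-sharing-spark connector and Microsoft's JDBC driver for SQL Server are available to your Spark session; it is not the code of delta_share_to_sql_spark.py, and jdbc_options() and copy_table() are hypothetical names.

```python
from typing import Dict


def jdbc_options(host: str, database: str, schema: str, table: str,
                 username: str, password: str, port: int = 1433) -> Dict[str, str]:
    """Options for Spark's built-in JDBC writer targeting Microsoft SQL Server."""
    return {
        "url": f"jdbc:sqlserver://{host}:{port};databaseName={database}",
        "dbtable": f"{schema}.{table}",
        "user": username,
        "password": password,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }


def copy_table(spark, table_url: str, options: Dict[str, str]) -> None:
    """Read one Delta Sharing table (profile#share.schema.table) and overwrite the target."""
    df = spark.read.format("deltaSharing").load(table_url)
    df.write.format("jdbc").options(**options).mode("overwrite").save()
```

mode("overwrite") replaces the target table on each run; Spark's JDBC writer also accepts a truncate option if you prefer to keep the existing table definition.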

Execution and Maintenance

Common Issues and Solutions

- Cron Job Setup:

- Ensure system permissions are correctly configured.

- Check system logs if the job fails to run.

- Verify the script delta_share_to_azure_panda.py has execute permissions.

- Configuration File:

- Ensure the file config.yaml is in the same directory as the script.

- Back up the file before making changes.

Support

For additional help:

- Review script logs for detailed error messages.

- Double-check the config.yaml file for misconfigurations.

- Contact your system administrator for permission-related issues.

- Reach out to Procore support for issues related to Delta Share access.

- Review the failed_tables.log file for tables that failed to sync.

Notes

- Always back up your configuration files before making changes.

- Test new configurations in a non-production environment to prevent disruptions.

Connect to ADLS Using Azure Functions

Overview

This guide walks you through setting up and deploying an Azure Function for integrating Delta Sharing data with Analytics. The Azure Function enables efficient data processing and sharing workflows with Delta Sharing profiles.

Prerequisites

- Analytics 2.0 SKU.

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

- Azure Setup:

- Azure CLI installed and logged in.

- Azure Functions Core Tools installed.

Steps

- Prepare the Package

- Install Dependencies

- Generate Configuration

- Azure CLI Setup

- Install Azure Functions Core Tools

- Prepare the Azure Function

- Deployment

- Validation

Prepare the Package

- Download the required package (adls_azure_function or sql_server_azure_function).

Note: You can download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > Azure).

- Extract the package files to a local directory.

- Place Delta Sharing file:

- Copy your *.share Delta Sharing profile file into the extracted directory.

Install Dependencies

- Open a terminal in the package directory.

- Run the following command to install the required Python dependencies:

- pip install -r requirements.txt

Generate Configuration

- Generate the config.yaml file by running:

- python user_exp.py

- The script will prompt you to enter credentials such as:

- Tables

- Database name

- Host

- Additional credentials

- The configuration can be reused or updated manually or by re-running python user_exp.py.

Azure CLI Setup

- Log in to Azure.

- Run the following command to log in:

- az login

- Verify your Azure account:

- az account show

- If the az command is not available, install the Azure CLI by following the instructions on Microsoft Learn.

Install Azure Functions Core Tools

Go to Microsoft Learn for instructions on installing Azure Functions Core Tools.

Prepare the Azure Function

- Use the Azure Portal guide to create the following:

- A function app

- A resource group

- Consumption plan

- Storage account

- Set a custom cron schedule (optional):

- Open function_app.py in an editor.

- Locate the line: @app.timer_trigger(schedule="0 0 */8 * * *",

- Replace the schedule with your custom Cron expression and save the file.
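Unlike the five-field cron schedules used by the other packages, Azure Functions timer triggers take a six-field NCRONTAB expression whose first field is seconds: {second} {minute} {hour} {day} {month} {day-of-week}. The default 0 0 */8 * * * therefore runs at the start of every eighth hour (00:00, 08:00, and 16:00, in UTC by default). If you already have a five-field schedule, prepending a seconds field converts it. A minimal, hypothetical helper:

```python
def to_ncrontab(cron5: str, second: str = "0") -> str:
    """Convert a five-field cron expression to the six-field form used by Azure Functions timers."""
    fields = cron5.split()
    if len(fields) != 5:
        raise ValueError(f"expected a five-field cron expression, got {cron5!r}")
    return " ".join([second, *fields])
```

For example, the daily 2 AM schedule 0 2 * * * becomes 0 0 2 * * *, which is the value you would place in the schedule argument of @app.timer_trigger in function_app.py.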

Deployment

- Open a terminal in the package directory (adls_azure_function).

- Run the following deployment command:

- func azure functionapp publish <FunctionAppName> --build remote --python --clean

- Replace <FunctionAppName> with the name of your Azure function app in your Azure subscription.

Validation

- Ensure the deployment is successful by checking the Azure Portal for your function app status.

- Monitor logs to verify that the function is executing as expected.

Connect to ADLS Using Python

Overview

This guide provides detailed instructions for setting up and using the Delta Sharing integration package on a Windows operating system to seamlessly integrate data into your workflows with Analytics. The package supports multiple execution options, allowing you to choose your desired configuration and integration method.

Prerequisites

Ensure you have the following before proceeding:

- Analytics 2.0 SKU

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

Steps

- Prepare the Package

- Install Dependencies

- Generate Configuration

- Configure Cron Jobs and Immediate Execution

- Execution and Maintenance

Prepare the Package

- Create a new file named config.share with your Delta Share credentials in JSON format.

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "https://nvirginia.cloud.databricks.c...astores/xxxxxx"
}
```

- Get the required fields:

Note: These details can be obtained from the Analytics web application.

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- Download and extract the package.

Note: You can download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > Azure).

- Unzip the package to a directory of your choice.

- Copy the *.share Delta Sharing profile file into the package directory for easy access.

Install Dependencies

- Open a terminal in the package directory.

- Run the following command to install the dependencies:

- pip install -r requirements.txt

Generate Configuration

- Generate the config.yaml file by running python user_exp.py:

This script generates the config.yaml file, which contains the necessary credentials and settings.

- When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated).

- Leave blank to sync all tables.

- Example: `table1, table2, table3`.

- Path to your `config.share` file.

- The first time you run the script, you provide your credentials and settings, such as the Delta Share source configuration location, tables, database, and host.

Note: Afterwards, you can reuse the configuration, update it manually, or run python user_exp.py again.

Configure Cron Jobs and Immediate Execution (Optional)

- Decide whether to set up a cron job for automatic execution.

- Provide a cron schedule:

- Format: * * * * * (minute, hour, day-of-month, month, day-of-week).

- Example for daily execution at 2 AM: 0 2 * * *

- To check scheduling logs, review the procore_scheduling.log file, which is created as soon as scheduling is set up.

You can also check scheduling from a terminal:

For Linux and macOS:

To edit or delete the scheduled cron job, run:

```bash
EDITOR=nano crontab -e
```

- After running the command above, you should see something similar to:

```
2 * * * * /Users/your_user/snowflake/venv/bin/python /Users/your_user/snowflake/sql_server_python/connection_config.py 2>&1 | while read line; do echo "$(date) - $line"; done >> /Users/your_user/snowflake/sql_server_python/procore_scheduling.log # procore-data-import
```

- You can adjust the cron schedule, or delete the whole line to stop the scheduled runs.
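The example line ends with the comment # procore-data-import, which makes the package's entry easy to find programmatically. A sketch for Linux and macOS, assuming that marker text stays as shown; procore_cron_entries and read_crontab are hypothetical helpers.

```python
import subprocess

# Comment that tags the package's crontab line, as in the example line.
MARKER = "# procore-data-import"


def procore_cron_entries(crontab_text):
    """Return only the crontab lines tagged with the procore-data-import marker."""
    return [line for line in crontab_text.splitlines() if MARKER in line]


def read_crontab():
    """Return the current user's crontab, or "" if none is installed (Linux/macOS)."""
    try:
        result = subprocess.run(["crontab", "-l"], capture_output=True, text=True)
    except FileNotFoundError:  # the crontab command itself is missing
        return ""
    return result.stdout if result.returncode == 0 else ""


# for line in procore_cron_entries(read_crontab()):
#     print(line)
```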

For Windows:

- Check that the scheduled task was created:

```powershell
schtasks /query /tn "ProcoreDeltaShareScheduling" /fo LIST /v
```

- To edit or delete the scheduled task, open the Task Scheduler:

- Press Win + R, type taskschd.msc, and press Enter.

- Navigate to the scheduled tasks.

- In the left pane, expand the Task Scheduler Library.

- Look for the folder where your task is saved (e.g., Task Scheduler Library or a custom folder).

- Find your task:

- Look for the task name ProcoreDeltaShareScheduling.

- Click on it to view its details in the bottom pane.

- Verify its schedule:

- Check the Triggers tab to see when the task is set to run.

- Check the History tab to confirm recent runs.

- To delete the task, delete it from the Task Scheduler GUI.
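On Windows, the same check can be scripted. The sketch below swaps the LIST format used above for /fo CSV, because CSV output can be parsed with Python's csv module. The column names it keeps (TaskName, Next Run Time, Last Run Time, Last Result, Status) are assumptions based on English-language Windows output, so verify them on your system; parse_schtasks_csv and task_status are hypothetical helpers.

```python
import csv
import io
import subprocess

TASK_NAME = "ProcoreDeltaShareScheduling"


def parse_schtasks_csv(output):
    """Parse `schtasks /query ... /fo CSV /v` output into one dict per row."""
    reader = csv.DictReader(io.StringIO(output))
    # Skip any repeated header rows that schtasks may emit.
    return [row for row in reader if row.get("TaskName") != "TaskName"]


def task_status(name=TASK_NAME):
    """Query the scheduled task on Windows and keep a few useful columns."""
    result = subprocess.run(
        ["schtasks", "/query", "/tn", name, "/fo", "CSV", "/v"],
        capture_output=True, text=True, check=True,
    )
    keep = ("TaskName", "Next Run Time", "Last Run Time", "Last Result", "Status")
    return [{key: row.get(key) for key in keep}
            for row in parse_schtasks_csv(result.stdout)]
```

A Last Result of 0 conventionally means the last run succeeded.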

Immediate execution:

- The configuration utility offers to run the data copy script immediately after configuration.

- After config.yaml is generated, you can run the copy script independently at any time. The script name depends on your package, for example:

```bash
python delta_share_to_azure_panda.py
# or
python delta_share_to_sql_spark.py
# or
python delta_share_to_azure_dfs_spark.py
```

Execution and Maintenance

Common Issues and Solutions

- Cron Job Setup:

- Ensure system permissions are correctly configured.

- Check system logs if the job fails to run.

- Verify the script delta_share_to_azure_panda.py has execute permissions.

- Configuration File:

- Ensure the file config.yaml is in the same directory as the script.

- Back up the file before making changes.
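Several of these checks can be run before a scheduled job fires. A minimal preflight sketch, assuming the script and config.yaml sit in the same folder as described above; preflight is a hypothetical helper, and the execute-permission check is only meaningful on Linux and macOS.

```python
import os
from pathlib import Path


def preflight(script_path, config_name="config.yaml"):
    """Return a list of problems that commonly stop scheduled runs (empty if none)."""
    script = Path(script_path).resolve()
    problems = []
    if not script.is_file():
        problems.append(f"script not found: {script}")
    elif not os.access(script, os.X_OK):
        problems.append(f"script is not executable: {script} (try chmod +x)")
    config = script.parent / config_name
    if not config.is_file():
        problems.append(f"{config_name} not found next to the script: {config}")
    return problems


# for problem in preflight("delta_share_to_azure_panda.py"):
#     print(problem)
```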

Support

For additional help:

- Review script logs for detailed error messages.

- Double-check the config.yaml file for misconfigurations.

- Contact your system administrator for permission-related issues.

- Reach out to Procore support for issues related to Delta Share access.

- Review failed_tables.log for tables that failed to sync.

Notes

- Always back up your configuration files before making changes.

- Test new configurations in a non-production environment to prevent disruptions.

Connect to ADLS Using Spark

Overview

This guide provides detailed instructions for setting up and using the Delta Sharing integration package on a Windows operating system to seamlessly integrate data into your workflows with Analytics. The package supports multiple execution options, allowing you to choose your desired configuration and integration method.

Prerequisites

Ensure you have the following before proceeding:

- Analytics 2.0 SKU

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

Steps

- Prepare the Package

- Install Dependencies

- Generate Configuration

- Configure Cron Jobs and Immediate Execution

- Execution and Maintenance

Prepare the Package

- Create a new file named config.share with your Delta Share credentials in JSON format:

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "https://nvirginia.cloud.databricks.c...astores/xxxxxx"
}
```

- Get the required fields. These details can be obtained from the Analytics web application:

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- Download and extract the package.

Note: You can download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > Azure).

- Unzip the package to a directory of your choice.

- Copy the *.share Delta Sharing profile file into the package directory for easy access.
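Under the Delta Sharing protocol, a table inside the share is addressed by appending #<share>.<schema>.<table> to the path of the profile file (config.share). A small sketch that builds such URLs; the helper names and the example share, schema and table names are hypothetical. Reading a URL with delta_sharing.load_as_spark also requires the Delta Sharing Spark connector on your cluster, which is why that call appears only as a comment.

```python
def table_url(profile_path, share, schema, table):
    """Build a Delta Sharing table URL of the form <profile-file>#<share>.<schema>.<table>."""
    for part in (share, schema, table):
        if not part or "." in part:
            raise ValueError(f"invalid share, schema or table name: {part!r}")
    return f"{profile_path}#{share}.{schema}.{table}"


def table_urls(profile_path, tables):
    """Map (share, schema, table) tuples to table URLs, keyed by table name."""
    return {table: table_url(profile_path, share, schema, table)
            for share, schema, table in tables}


# With the delta-sharing package and Spark connector available, for example:
#   import delta_sharing
#   df = delta_sharing.load_as_spark(
#       table_url("config.share", "my_share", "my_schema", "my_table"))
```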

Install Dependencies

- Open a terminal in the package directory.

- Run the following command to install the dependencies:

```bash
pip install -r requirements.txt
```

Generate Configuration

- Generate the config.yaml file by running python user_exp.py. This script generates the config.yaml file that contains the necessary credentials and settings.

- When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated). Leave blank to sync all tables. Example: `table1, table2, table3`.

- Path to your `config.share` file.

- The first time you run the script, you will provide settings such as the Delta Share source configuration location, tables, database, and host.

Note: Afterwards, you can reuse the config, update it manually, or run python user_exp.py again.

Configure Cron Jobs and Immediate Execution (Optional)

- Decide whether to set up a cron job for automatic execution.

- Provide a cron schedule:

- Format: * * * * * (minute, hour, day-of-month, month, day-of-week).

- Example for daily execution at 2 AM: 0 2 * * *

- Scheduling logs are written to procore_scheduling.log, which is created as soon as scheduling is set up.

You can also check the schedule from a terminal.

For Linux and macOS:

To edit or delete the schedule, edit the crontab with:

```bash
EDITOR=nano crontab -e
```

- After running the command above, you should see something similar to:

```
2 * * * * /Users/your_user/snowflake/venv/bin/python /Users/your_user/snowflake/sql_server_python/connection_config.py 2>&1 | while read line; do echo "$(date) - $line"; done >> /Users/your_user/snowflake/sql_server_python/procore_scheduling.log # procore-data-import
```

- You can adjust the cron schedule, or delete the whole line to stop the scheduled runs.
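To stop the scheduled runs without opening an editor, the tagged line can be filtered out and the crontab reinstalled. A sketch for Linux and macOS, assuming the # procore-data-import marker shown in the example line; the helper names are hypothetical, and you may want to save the output of crontab -l first.

```python
import subprocess

MARKER = "# procore-data-import"  # tag on the package's crontab line


def without_procore_entries(crontab_text):
    """Return the crontab text with every procore-data-import line removed."""
    kept = [line for line in crontab_text.splitlines() if MARKER not in line]
    return "\n".join(kept) + "\n" if kept else ""


def remove_procore_schedule():
    """Rewrite the current user's crontab without the Procore line (Linux/macOS)."""
    current = subprocess.run(["crontab", "-l"], capture_output=True, text=True)
    if current.returncode != 0:
        return False  # no crontab installed, nothing to remove
    new_text = without_procore_entries(current.stdout)
    # "crontab -" installs a new crontab read from standard input.
    subprocess.run(["crontab", "-"], input=new_text, text=True, check=True)
    return True
```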

For Windows:

- Check that the scheduled task was created:

```powershell
schtasks /query /tn "ProcoreDeltaShareScheduling" /fo LIST /v
```

- To edit or delete the scheduled task, open the Task Scheduler:

- Press Win + R, type taskschd.msc, and press Enter.

- Navigate to the scheduled tasks.

- In the left pane, expand the Task Scheduler Library.

- Look for the folder where your task is saved (e.g., Task Scheduler Library or a custom folder).

- Find your task:

- Look for the task name ProcoreDeltaShareScheduling.

- Click on it to view its details in the bottom pane.

- Verify its schedule:

- Check the Triggers tab to see when the task is set to run.

- Check the History tab to confirm recent runs.

- To delete the task, delete it from the Task Scheduler GUI.

Immediate execution:

- The configuration utility offers to run the data copy script immediately after configuration.

- After config.yaml is generated, you can run the copy script independently at any time. The script name depends on your package, for example:

```bash
python delta_share_to_azure_panda.py
# or
python delta_share_to_sql_spark.py
# or
python delta_share_to_azure_dfs_spark.py
```

Execution and Maintenance

Common Issues and Solutions

- Cron Job Setup:

- Ensure system permissions are correctly configured.

- Check system logs if the job fails to run.

- Verify the script delta_share_to_azure_panda.py has execute permissions.

- Configuration File:

- Ensure the file config.yaml is in the same directory as the script.

- Back up the file before making changes.
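Backing up config.yaml can be as simple as a timestamped copy next to the original. A minimal sketch using the standard library; backup_config is a hypothetical helper and the .bak naming scheme is an arbitrary choice.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_config(path="config.yaml"):
    """Copy the file to <name>.<timestamp>.bak in the same folder and return the copy's path."""
    source = Path(path)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S-%f")  # microseconds avoid clobbering
    target = source.with_name(f"{source.name}.{stamp}.bak")
    shutil.copy2(source, target)  # copy2 also preserves file timestamps
    return target
```

To restore, copy the chosen .bak file back over config.yaml.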

Support

For additional help:

- Review script logs for detailed error messages.

- Double-check the config.yaml file for misconfigurations.

- Contact your system administrator for permission-related issues.

- Reach out to Procore support for issues related to Delta Share access.

- Review failed_tables.log for tables that failed to sync.

Notes

- Always back up your configuration files before making changes.

- Test new configurations in a non-production environment to prevent disruptions.

Connect to Fabric Lakehouse Using Data Factory

Overview

Integrating Delta Sharing with Microsoft Fabric Data Factory enables seamless access and processing of shared Delta tables for your analytics workflows with Analytics 2.0. Delta Sharing, an open protocol for secure data collaboration, ensures organizations can share data without duplication.

Prerequisites

- Analytics 2.0 SKU

- Delta Sharing Credentials:

- Obtain the share.json (or equivalent) Delta Sharing credentials file from your data provider.

- This file should include:

- Endpoint URL: The Delta Sharing Server URL.

- Bearer Token: Used for secure data access.

- Microsoft Fabric Setup:

- A Microsoft Fabric tenant account with an active subscription.

- Access to a Microsoft Fabric-enabled workspace.

Steps

- Switch to the Data Factory Experience

- Configure the Dataflow

- Perform Data Transformations

- Validation and Monitoring

Switch to the Data Factory Experience

- Navigate to your Microsoft Fabric Workspace.

- Select New, then choose Dataflow Gen2.

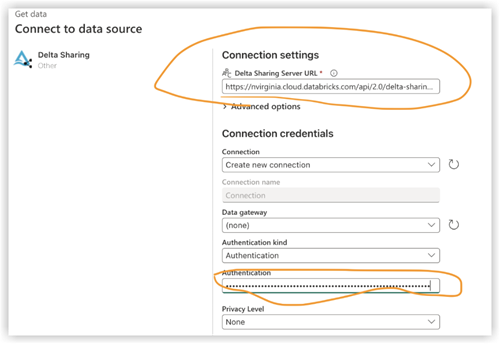

Configure the Dataflow

- Go to the dataflow editor.

- Click Get Data and select More.

- Under New source, select Delta Sharing (listed under Other) as the data source.

- Enter the following details:

- URL: From your Delta Sharing configuration file.

- Bearer Token: Found in your config.share file.

- Click Next and select the desired tables.

- Click Create to complete the setup.

Perform Data Transformations

After configuring the dataflow, you can apply transformations to the shared Delta data. Choose one of the following options for your Delta Sharing data:

- Add Data Destination

- Create/Open Lakehouse

Add Data Destination

- Go to Data Factory.

- Click Add Data Destination.

- Select Lakehouse as the target and click Next.

- Choose your destination target and confirm by clicking Next.

Create/Open Lakehouse

- Create or open your Lakehouse and click Get data.

- Select New Dataflow Gen2.

- Click Get Data, then More and find Delta Sharing.

- Enter the URL and bearer token from your config.share file, then select Next.

- Choose the tables to download and click Next.

- After these steps, all the selected data should be in your Fabric Lakehouse.

Validation and Monitoring

Test your data pipelines and flows to ensure smooth execution. Use monitoring tools within Data Factory to track progress and logs for each activity.

Connect to Fabric Lakehouse Using Fabric Notebooks

Overview

Using Data Factory in Microsoft Fabric with Delta Sharing enables seamless integration and processing of shared Delta tables as part of your analytics workflows with Analytics 2.0. Delta Sharing is an open protocol for secure data sharing, allowing collaboration across organizations without duplicating data.

This guide walks you through the steps to set up and use Data Factory in Fabric with Delta Sharing, utilizing Notebooks for processing and exporting data to a Lakehouse.

Prerequisites

- Analytics 2.0 SKU

- Delta Sharing Credentials:

- Access to Delta Sharing credentials provided by a data provider.

- A sharing profile file (config.share) containing:

- Endpoint URL (Delta Sharing Server URL).

- Access Token (Bearer token for secure data access).

- Create your config.yaml file with specific credentials.

- Microsoft Fabric Environment:

- A Microsoft Fabric tenant account with an active subscription.

- A Fabric-enabled Workspace.

- Packages and Scripts:

- Download the fabric-lakehouse package. The directory should include:

- ds_to_lakehouse.py: Notebook code.

- readme.md: Instructions.

Note: You can download the zipped package from the company level Analytics tool (via Analytics > Getting Started > Connection Options > Azure).


Steps

Set Up Configuration

- Create the config.yaml file and define the configuration using the following structure:

```yaml
source_config:
  config_path: path/to/your/delta-sharing-credentials-file.share
  tables: # Optional - Leave empty to process all tables
    - table_name1
    - table_name2
target_config:
  lakehouse_path: path/to/your/fabric/lakehouse/Tables/ # Path to the Fabric Lakehouse
```
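Before uploading config.yaml, you can check that it has the structure shown above. A minimal sketch that validates the loaded dictionary; it assumes the file has been parsed with PyYAML's yaml.safe_load, and validate_lakehouse_config is a hypothetical helper, not code from ds_to_lakehouse.py.

```python
def validate_lakehouse_config(cfg):
    """Return a list of problems found in a config.yaml that has been loaded into a dict."""
    errors = []
    source = cfg.get("source_config") or {}
    target = cfg.get("target_config") or {}
    if not source.get("config_path"):
        errors.append("source_config.config_path must point to your .share file")
    tables = source.get("tables")
    # An empty "tables:" key loads as None, which means "process all tables".
    if tables is not None and not (isinstance(tables, list)
                                   and all(isinstance(t, str) and t for t in tables)):
        errors.append("source_config.tables must be a list of table names or left empty")
    if not target.get("lakehouse_path"):
        errors.append("target_config.lakehouse_path is required")
    return errors


# Typical use (requires PyYAML):
#   import yaml
#   with open("config.yaml") as f:
#       print(validate_lakehouse_config(yaml.safe_load(f)))
```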

Set Up Your Lakehouse

1. Open your Microsoft Fabric workspace.

2. Navigate to your Lakehouse and click Open Notebook, then New Notebook.

- If you don't know the value for lakehouse_path in config.yaml, you can copy it from the screen: click the ellipsis (...) on Files and select Copy ABFS path.

3. Copy the code of ds_to_lakehouse.py and paste it into the notebook window (PySpark (Python)).

Next, upload your own config.yaml and config.share into the Resources folder of the Lakehouse. You can create your own directory or use the built-in directory (already created for resources by the Lakehouse). For example, you can keep config.yaml in the standard built-in directory.

Note: Make sure you upload both files at the same level, and set the config_path property in config.yaml accordingly.

4. Check lines 170-175 of the notebook code and change the config path, for example:

```python
# Before: the notebook expects config.yaml in a custom "env" folder
config_path = "./env/config.yaml"

# After: config.yaml was uploaded to the built-in resources folder
config_path = "./builtin/config.yaml"
```

This change is needed because the files are in the built-in folder rather than a custom env folder. Keep track of your own file structure: you can upload the files to different folders, but in that case, update the notebook code so it can find the config.yaml file.

5. Click Run cell.
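Because the notebook only finds the files if they were uploaded where it expects them, a quick check in a cell before running can save a failed run. A sketch assuming the built-in resources folder is reachable at ./builtin, as in the config_path example above; missing_resource_files is a hypothetical helper.

```python
from pathlib import Path


def missing_resource_files(folder="./builtin", names=("config.yaml", "config.share")):
    """Return the expected files that are not present in the notebook resources folder."""
    base = Path(folder)
    return [name for name in names if not (base / name).is_file()]


# In a notebook cell, before the main code runs:
#   missing = missing_resource_files()
#   if missing:
#       raise FileNotFoundError(f"Upload {missing} to the built-in resources folder")
```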

Validation

- Wait until the job finishes; it should copy all the data.

- Once the job completes, verify that the data has been copied successfully to your Lakehouse.

- Check the specified tables and ensure the data matches the shared Delta tables.

Connect to SQL Server Using Azure Functions

Overview

This guide walks you through setting up and deploying an Azure Function for integrating Delta Sharing data with Analytics. The Azure Function enables efficient data processing and sharing workflows with Delta Sharing profiles.

Prerequisites

- Analytics 2.0 SKU.

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

- Azure Setup:

- Azure CLI installed and logged in.

- Azure Functions Core Tools installed.

Steps

- Prepare the Package

- Install Dependencies

- Generate Configuration

- Azure CLI Setup

- Install Azure Functions Core Tools

- Prepare the Azure Function

- Deployment

- Validation

Prepare the Package

- Download the required package (adls_azure_function or sql_server_azure_function).

Note: You can download the zipped package from the company-level Analytics tool (via Analytics > Getting Started > Connection Options > Azure).

- Extract the package files to a local directory.

- Place Delta Sharing file:

- Copy your *.share Delta Sharing profile file into the extracted directory.

Install Dependencies

- Open a terminal in the package directory.

- Run the following command to install the required Python dependencies:

```bash
pip install -r requirements.txt
```

Generate Configuration

- Generate the config.yaml file by running:

```bash
python user_exp.py
```

- The script will prompt you to enter credentials such as:

- Tables

- Database name

- Host

- Additional credentials

- The configuration can be reused or updated manually or by re-running python user_exp.py.

Azure CLI Setup

- Log in to Azure by running the following command:

```bash
az login
```

- Verify your Azure account:

```bash
az account show
```

- If the az command is not available, install the Azure CLI by following the instructions on Microsoft Learn.
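The two deployment prerequisites can also be checked from Python. A minimal sketch: missing_cli_tools uses shutil.which to see whether az and func are on PATH, and current_subscription reads the name and id fields from the JSON that az account show prints. Both helpers are hypothetical.

```python
import json
import shutil
import subprocess


def missing_cli_tools(tools=("az", "func")):
    """Return the tools in `tools` that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def current_subscription():
    """Return (name, id) of the subscription that `az account show` reports as active."""
    az = shutil.which("az")
    if az is None:
        raise FileNotFoundError("Azure CLI (az) not found on PATH")
    result = subprocess.run([az, "account", "show", "--output", "json"],
                            capture_output=True, text=True, check=True)
    account = json.loads(result.stdout)
    return account["name"], account["id"]


# missing = missing_cli_tools()
# if missing:
#     print("Install these tools first:", missing)
```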

Install Azure Functions Core Tools

Go to Microsoft Learn for instructions on installing Azure Functions Core Tools.

Prepare the Azure Function

- Use the Azure Portal guide to create the following:

- A function app

- A resource group

- A Consumption plan

- A storage account

- Set a custom cron schedule (optional):

- Open function_app.py in an editor.

- Locate the line beginning with `@app.timer_trigger(schedule="0 0 */8 * * *",`.

- Replace the schedule with your custom Cron expression and save the file.
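Azure Functions timer triggers use a six-field NCRONTAB expression, {second} {minute} {hour} {day} {month} {day-of-week}, rather than the five-field cron format used by the other packages in this guide. The default "0 0 */8 * * *" therefore fires at second 0, minute 0 of every eighth hour, evaluated in UTC by default. A sketch of converting a familiar five-field expression, assuming you only want to run at second 0; to_ncrontab is a hypothetical helper.

```python
def to_ncrontab(expression, second="0"):
    """Turn a five-field cron expression into the six-field NCRONTAB form used by
    Azure Functions timer triggers: {second} {minute} {hour} {day} {month} {day-of-week}."""
    fields = expression.split()
    if len(fields) == 6:
        return " ".join(fields)  # already has a seconds field
    if len(fields) != 5:
        raise ValueError("expected a five- or six-field expression")
    return " ".join([second, *fields])


# to_ncrontab("0 2 * * *") -> "0 0 2 * * *" (daily at 02:00)
```

The returned string is what you would paste into the schedule argument in function_app.py.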

Deployment

- Open a terminal in the package directory (adls_azure_function or sql_server_azure_function).

- Run the following deployment command:

```bash
func azure functionapp publish <FunctionAppName> --build remote --python --clean
```

- Replace <FunctionAppName> with the name of your Azure function app in your Azure subscription.

Validation

- Ensure the deployment is successful by checking the Azure Portal for your function app status.

- Monitor logs to verify that the function is executing as expected.

Connect to SQL Server Using Data Factory

Overview

This document provides step-by-step instructions for setting up a data pipeline in Microsoft Fabric to transfer data from Delta Share to a SQL warehouse. This configuration enables seamless data integration between Delta Lake sources and SQL destinations.

Prerequisites

- Active Microsoft Fabric account with appropriate permissions.

- Delta Share credentials.

- SQL warehouse credentials.

- Access to Dataflow Gen2 in Fabric.

Steps

- Access Dataflow Gen2

- Configure Data Source

- Set Up Delta Share Connection

- Configure Data Destination

- Finalize and Deploy

- Verification

- Troubleshooting

Access Dataflow Gen2

- Log in to your Microsoft Fabric account.

- Navigate to the workspace.

- Select 'Dataflow Gen2' from the available options.

Configure Data Source

- Click on 'Data from another source' to begin the configuration.

- From the Get Data screen do the following:

- Locate the search bar labeled 'Choose data source'.

- Type 'delta sharing' in the search field.

- Select Delta Sharing from the results.

Set Up Delta Share Connection

- Enter your Delta Share credentials when prompted.

- Ensure all required fields are completed accurately.

- Validate the connection if possible.

- Click 'Next' to proceed.

- Review the list of available tables:

- All tables you have access to will be displayed.

- Select the desired tables for transfer.

Configure Data Destination

- Click 'Add Data Destination'.

- Select 'SQL warehouse' as your destination.

- Enter SQL credentials:

- Server details.

- Authentication information.

- Database specifications.

- Verify the connection settings.

Finalize and Deploy

- Review all configurations.

- Click 'Publish' to deploy the data flow.

- Wait for the confirmation message.

Verification

- Access your SQL warehouse.

- Verify that the data is available and properly structured.

- Run test queries to ensure data integrity.

Troubleshooting

Common issues and solutions:

- Connection failures: Verify credentials and network connectivity.

- Missing tables: Check Delta Share permissions.

- Performance issues: Review resource allocation and optimization settings.

Connect to SQL Server Using Fabric Notebook

Overview

Using Data Factory in Microsoft Fabric with Delta Sharing enables seamless integration and processing of shared Delta tables as part of your analytics workflows with Analytics 2.0. Delta Sharing is an open protocol for secure data sharing, allowing collaboration across organizations without duplicating data.

This guide walks you through the steps to set up and use Data Factory in Fabric with Delta Sharing, utilizing Notebooks for processing and exporting data to a Lakehouse.

Prerequisites

- Analytics 2.0 SKU

- Delta Sharing Credentials:

- Access to Delta Sharing credentials provided by a data provider.

- A sharing profile file (config.share) containing:

- Endpoint URL (Delta Sharing Server URL).

- Access Token (Bearer token for secure data access).

- Create your config.yaml file with specific credentials.

- Microsoft Fabric Environment:

- A Microsoft Fabric tenant account with an active subscription.

- A Fabric-enabled Workspace.

- Packages and Scripts:

- Download the fabric-lakehouse package. The directory should include:

- ds_to_lakehouse.py: Notebook code.

- readme.md: Instructions.

Note: You can download the zipped package from the company level Analytics tool (via Analytics > Getting Started > Connection Options > Azure).


Steps

Set Up Configuration

- Create the config.yaml file and define the configuration using the following structure:

```yaml
source_config:
  config_path: path/to/your/delta-sharing-credentials-file.share
  tables: # Optional - Leave empty to process all tables
    - table_name1
    - table_name2
target_config:
  lakehouse_path: path/to/your/fabric/lakehouse/Tables/ # Path to the Fabric Lakehouse
```

Set Up Your Lakehouse

1. Open your Microsoft Fabric workspace.

2. Navigate to your Lakehouse and click Open Notebook, then New Notebook.

- If you don't know the value for lakehouse_path in config.yaml, you can copy it from the screen: click the ellipsis (...) on Files and select Copy ABFS path.

3. Copy the code of ds_to_lakehouse.py and paste it into the notebook window (PySpark (Python)).

Next, upload your own config.yaml and config.share into the Resources folder of the Lakehouse. You can create your own directory or use the built-in directory (already created for resources by the Lakehouse). For example, you can keep config.yaml in the standard built-in directory.

Note: Make sure you upload both files at the same level, and set the config_path property in config.yaml accordingly.

4. Check lines 170-175 of the notebook code and change the config path, for example:

```python
# Before: the notebook expects config.yaml in a custom "env" folder
config_path = "./env/config.yaml"

# After: config.yaml was uploaded to the built-in resources folder
config_path = "./builtin/config.yaml"
```

This change is needed because the files are in the built-in folder rather than a custom env folder. Keep track of your own file structure: you can upload the files to different folders, but in that case, update the notebook code so it can find the config.yaml file.

5. Click Run cell.

Validation

- Wait until the job finishes; it should copy all the data.

- Once the job completes, verify that the data has been copied successfully to your Lakehouse.

- Check the specified tables and ensure the data matches the shared Delta tables.

Connect to Databricks

Note: This method of connection is typically used by data professionals.

- Log in to your Databricks environment.

- Navigate to the Catalog section.

- Select Delta Sharing from the top menu.

- Select Shared with me.

- Copy the Sharing Identifier provided for you.

- In Procore, click the Account & Profile icon in the top-right area of the navigation bar.

- Click My Profile Settings.

- Click the Analytics tab.

- Enter your Databricks sharing identifier.

- Click Connect.

Note: Once the sharing identifier is added to Procore's system, the Procore Databricks connection will appear within the Shared with me tab under Providers in your Databricks environment. It may take up to 24 hours to see the data.

- When your Procore Databricks connection becomes visible in the Shared with me tab, select the Procore Identifier and click Create Catalog.

- Enter your preferred name for the shared catalog and click Create.

- Your shared catalog and tables will now show under the provided name in the Catalog Explorer.

Note: Please reach out to Procore Support if you have any questions or need assistance.

Connect to Snowflake Using Python

Overview

The Analytics Cloud Connect Access tool is a command-line interface (CLI) that helps you configure and manage data transfers from Procore to Snowflake.

It consists of two main components:

- user_exp.py: Configuration setup utility

- ds_to_snowflake.py: Data synchronization script

Prerequisites

- Python is installed on your system

- Access to Procore Delta Share

- Snowflake account credentials

- Download the zipped package from the company level Analytics tool (via Analytics > Getting Started > Connection Options > Snowflake).

- Install the required dependencies using:

```bash
pip install -r requirements.txt
```

- Delta Sharing profile file:

- Update the token and endpoint received from the Procore UI in the template_config.share file (found in the downloaded contents), and rename template_config.share to config.share.

- Python Environment:

- Install Python 3.9+ and pip on your system.

Steps

- Initial Configuration

- Data Synchronization

- Delta Share Source Configuration

- Snowflake Target Configuration

- Scheduling Options

- Best Practices

- Troubleshooting

Initial Configuration

Run the configuration utility using python user_exp.py.

Data Synchronization

After configuration, you have two options to run the data sync:

- Direct Execution:

- python ds_to_snowflake.py

- Scheduled Execution

- If configured during setup, the job will run automatically according to your Cron schedule.

- Scheduling logs are written to `procore_scheduling.log`, which is created as soon as scheduling is set up.

- You can also check the schedule from a terminal.

For Linux and macOS:

- To edit or delete the schedule, edit the crontab with:

```bash
EDITOR=nano crontab -e
```

- After running the command above, you should see something similar to:

```
2 * * * * /Users/your_user/snowflake/venv/bin/python /Users/your_user/snowflake/sql_server_python/connection_config.py 2>&1 | while read line; do echo "$(date) - $line"; done >> /Users/your_user/snowflake/sql_server_python/procore_scheduling.log # procore-data-import
```

- You can adjust the cron schedule, or delete the whole line to stop the scheduled runs.

For Windows:

- Check that the scheduled task was created:

```powershell
schtasks /query /tn "ProcoreDeltaShareScheduling" /fo LIST /v
```

- To edit or delete the scheduled task, open the Task Scheduler.

- Press Win + R, type taskschd.msc, and press enter.

- Navigate to the scheduled tasks.

- In the left pane, expand the Task Scheduler Library.

- Look for the folder where your task is saved (for example, Task Scheduler Library or a custom folder).

- Find your task.

- Look for the task name: ProcoreDeltaShareScheduling.

- Click on it to view the details in the bottom pane.

- Verify its schedule:

- Check the Triggers tab to see when the task is set to run.

- Check the History tab to confirm recent runs.

- To delete the task, delete it from the Task Scheduler GUI.

Delta Share Configuration

- Creating config.share file

- Before running the configuration utility, you need to create a config.share file with your Delta Share credentials. The file should be in JSON format:

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "https://nvirginia.cloud.databricks.c...astores/xxxxxx"
}
```

- Required fields:

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- These details can be obtained from the Procore web UI.

- Steps to create config.share:

- Create a new file named config.share.

- Copy the above JSON template.

- Replace the placeholder values with your actual credentials.

- Save the file in a secure location.

- You'll need to provide the path to this file during configuration. When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated).

- Leave blank to sync all tables.

- Example: table1, table2, table3.

- Path to your config.share file.

Snowflake Configuration

You'll need to provide the following Snowflake details:

- Authentication (choose one):

- User Authentication

- Username

- Password (entered securely)


- Key Pair Authentication

- Username

- Private key file path

- Private key file password

- Connection Details:

- Account identifier

- Warehouse name

- Database name

- Schema name

- Number of concurrent threads
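The prompts above map onto keyword arguments for snowflake.connector.connect from the snowflake-connector-python package. A sketch that assembles them for either authentication type; the private_key_file and private_key_file_pwd parameter names are assumptions based on recent connector releases (older releases expect a loaded key passed as private_key), so check your installed version. snowflake_connect_args is a hypothetical helper, not code from ds_to_snowflake.py.

```python
def snowflake_connect_args(auth_type, user, account, warehouse, database, schema,
                           password=None, private_key_file=None, private_key_file_pwd=None):
    """Assemble keyword arguments for snowflake.connector.connect() for either auth type."""
    args = {"user": user, "account": account, "warehouse": warehouse,
            "database": database, "schema": schema}
    if auth_type in ("1", "user"):
        if not password:
            raise ValueError("password is required for user authentication")
        args["password"] = password
    elif auth_type in ("2", "key_pair"):
        if not private_key_file:
            raise ValueError("private_key_file is required for key pair authentication")
        # Assumed parameter names; verify against your connector version.
        args["private_key_file"] = private_key_file
        if private_key_file_pwd:
            args["private_key_file_pwd"] = private_key_file_pwd
    else:
        raise ValueError("auth_type must be '1' (user) or '2' (key_pair)")
    return args


# import snowflake.connector
# conn = snowflake.connector.connect(**snowflake_connect_args(
#     "1", "snowflake_user", "my_account", "my_warehouse",
#     "procore_db", "procore_schema", password="..."))
```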

Scheduling Options

The tool offers the ability to schedule automatic data synchronization.

- Cron Job Configuration

- Choose whether to set up a daily job

- If yes, provide a cron schedule

- Format: * * * * * (minute hour day-of-month month day-of-week)

- Example for daily at 2 AM: 0 2 * * *

- Immediate Execution

- Option to run ds_to_snowflake.py immediately after configuration

- File Structure

```
├── requirements.txt         # Dependencies
├── user_exp.py              # Configuration utility
├── ds_to_snowflake.py       # Data sync script
├── config.yaml              # Generated configuration
├── config.share             # Delta Share config file
└── procore_scheduling.log   # Log of scheduling runs
```

Example Usage

```
# Step 1: Install dependencies
$ pip install -r requirements.txt

# Step 2: Run configuration utility
$ python user_exp.py
Procore Analytics Cloud Connect Access
This CLI will help you choose your source and destination store to access/write Procore data into Snowflake.
Press Enter to Continue.
Enter list of tables (comma-separated), leave it blank for all tables: projects,users,tasks
Enter path to config.share: /path/to/config.share
Enter user name: snowflake_user
What authentication type do you want to use? (user/key_pair):
1 for user,
2 for key-pair:
1
Enter password: ********
Enter Account: my_account
Enter warehouse: my_warehouse
Enter database name: procore_db
Enter schema name: procore_schema
Enter number of threads: 4
Do you want to configure this as a daily job on cron? (Yes/No): Yes
Enter the schedule in cron format (e.g., * * * * * ): 0 2 * * *
Do you want to execute the job now? (Yes/No): Yes

# Step 3: Manual execution (if needed)
$ python ds_to_snowflake.py
```


- Configuration Reuse

The tool saves your configuration in the config.yaml file and offers to reuse previously stored settings:

- Source configuration can be reused.

- Target (Snowflake) configuration can be reused.

- You can choose to update either configuration independently.

Best Practices

- Authentication

- Use key pair authentication when possible.

- Regularly rotate credentials.

- Use minimal required permissions.

- Performance

- Adjust thread count based on your system capabilities.

- Start with a smaller subset of tables for testing.
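The thread count controls how many tables are synchronized in parallel. A sketch of that pattern with concurrent.futures, assuming a hypothetical sync_one(table) callable that copies one table; it is not the code in ds_to_snowflake.py. Failures are collected rather than stopping the run, which makes it easy to retry just those tables.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def sync_tables(tables, sync_one, threads=4):
    """Run sync_one(table) for each table on a pool of `threads` workers.

    Returns (succeeded, failed): a sorted list of table names, and a dict
    mapping each failed table to its error message.
    """
    succeeded, failed = [], {}
    with ThreadPoolExecutor(max_workers=threads) as pool:
        futures = {pool.submit(sync_one, table): table for table in tables}
        for future in as_completed(futures):
            table = futures[future]
            try:
                future.result()
                succeeded.append(table)
            except Exception as exc:  # keep going; report failures at the end
                failed[table] = str(exc)
    return sorted(succeeded), failed
```

Starting with a small table list and a low thread count, then raising it while watching CPU, memory and network use, follows the advice above.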

Troubleshooting

- Common issues and solutions:

- Invalid Authentication Type

- Make sure you select either '1' (user) or '2' (key_pair) when prompted.


- Cron Job Setup

- Verify you have appropriate system permissions.

- Check system logs if the job fails to run.

- Ensure that ds_to_snowflake.py has the correct permissions.

- Verify the cron job setup by checking the `procore_scheduling.log` file.

- Configuration File

- Located in the same directory as the script,

- Named config.yaml.

- Back up before making any changes.

- Support

- Check the script's logging output.

- Review your config.yaml file.

- Contact your system administrator for permission-related issues.

- Reach out to Procore support for Delta Share access issues.

Note: Remember to always back up your configuration before making changes and test new configurations in a non-production environment first.

Connect to Amazon S3 Using Python

Overview

The Analytics Cloud Connect Access tool is a command-line interface (CLI) that helps you configure and manage data transfers from Procore to Amazon S3 with Analytics 2.0.

It consists of two main components:

- user_exp.py: Configuration setup utility

- delta_share_to_s3.py: Data synchronization script

Prerequisites

- Analytics 2.0 SKU

- Python is installed on your system

- Access to Procore Delta Share

- S3 Access Keys

- Download the zipped package from the company level Analytics tool (via Analytics > Getting Started > Connection Options > AWS).

- Install the required dependencies using:

```bash
pip install -r requirements.txt
```

Steps

- Initial Configuration

- Delta Share Source Configuration

- S3 Configuration

- Scheduling Options

- Best Practices

- Troubleshooting

Initial Configuration

Run the configuration utility using python user_exp.py.

This will help you set up the following:

- Delta Share Configuration

- S3 Target Configuration

- Scheduling Preferences

Delta Share Configuration

- Creating config.share file

- Before running the configuration utility, you need to create a config.share file with your Delta Share credentials. The file should be in JSON format:

```json
{
  "shareCredentialsVersion": 1,
  "bearerToken": "xxxxxxxxxxxxx",
  "endpoint": "xxxxxx"
}
```

- Required fields:

- shareCredentialsVersion: Version number (currently 1).

- bearerToken: Your Delta Share access token.

- endpoint: Your Delta Share endpoint URL.

- These details can be obtained from the Procore web UI.

- Steps to create config.share:

- Create a new file named config.share.

- Copy the above JSON template.

- Replace the placeholder values with your actual credentials.

- Save the file in a secure location.

- You'll need to provide the path to this file during configuration. When configuring the data source, you'll be asked to provide:

- List of tables (comma-separated).

- Leave blank to sync all tables.

- Example: table1, table2, table3.

- Path to your config.share file.
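Before running the utility, you can catch the most common input mistakes with a quick local check. This is a minimal Python sketch, assuming `config.share` uses the JSON template above; `load_share_profile` and `parse_table_list` are hypothetical helpers, and treating an empty table list as "sync all tables" simply mirrors the prompt's description.

```python
import json

REQUIRED_FIELDS = ("shareCredentialsVersion", "bearerToken", "endpoint")


def load_share_profile(path: str) -> dict:
    """Read a config.share file and confirm the required Delta Share fields are present."""
    with open(path, encoding="utf-8") as handle:
        profile = json.load(handle)
    missing = [field for field in REQUIRED_FIELDS if profile.get(field) in (None, "")]
    if missing:
        raise ValueError(f"{path} is missing: {', '.join(missing)}")
    if profile["shareCredentialsVersion"] != 1:
        raise ValueError("This sketch only handles shareCredentialsVersion 1")
    return profile


def parse_table_list(answer: str) -> list:
    """Turn 'table1, table2, table3' into ['table1', 'table2', 'table3'].

    An empty answer returns [], which stands for 'sync all tables'.
    """
    return [name.strip() for name in answer.split(",") if name.strip()]
```

The same JSON layout is the standard Delta Sharing profile format, so a file that passes this check can also be passed to `delta_sharing.SharingClient`.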

S3 Configuration

You'll need to provide the following S3 details:

- Authentication:

- Access key

- Secret key

- Bucket name (without the s3:// prefix)

- Key: the directory (prefix) within the bucket where the data is written
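The internals of delta_share_to_s3.py are not described here. As a rough illustration of how these S3 settings fit together, the sketch below reads one shared table and writes it to S3 as a single Parquet object. It assumes `boto3`, `delta-sharing`, `pandas`, and `pyarrow` are installed; the function names, the `<directory>/<table>.parquet` key layout, and the choice of Parquet are all hypothetical.

```python
import io


def normalize_bucket(name: str) -> str:
    """Accept 'my-bucket' or 's3://my-bucket/...' and return just 'my-bucket'."""
    name = name.strip()
    if name.startswith("s3://"):
        name = name[len("s3://"):]
    return name.split("/", 1)[0]


def object_key(directory: str, table: str) -> str:
    """Join the target directory (the 'key' setting) and a table name into an object key."""
    directory = directory.strip("/")
    filename = f"{table}.parquet"
    return f"{directory}/{filename}" if directory else filename


def upload_table(share_file, table_fqn, access_key, secret_key, bucket, directory):
    """Hypothetical sync step: load '<share>.<schema>.<table>' and put it in S3."""
    # Imported here so the pure helpers above work without the AWS/Delta libraries.
    import boto3
    import delta_sharing

    frame = delta_sharing.load_as_pandas(f"{share_file}#{table_fqn}")
    buffer = io.BytesIO()
    frame.to_parquet(buffer, index=False)  # requires pyarrow or fastparquet
    s3 = boto3.client(
        "s3", aws_access_key_id=access_key, aws_secret_access_key=secret_key
    )
    key = object_key(directory, table_fqn.split(".")[-1])
    s3.put_object(Bucket=normalize_bucket(bucket), Key=key, Body=buffer.getvalue())
    return key
```

Stripping an accidental `s3://` prefix in `normalize_bucket` reflects the instruction to enter the bucket name without it. Loading the whole table into memory is only reasonable for tables that fit in RAM.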

Scheduling Options

The tool offers the ability to schedule automatic data synchronization.

- Cron Job Configuration

- Choose whether to set up a daily job.

- If yes, provide a cron schedule.

- Format: * * * * * (minute hour day-of-month month day-of-week).

- Example for daily at 2 AM: 0 2 * * *

- The scheduling log file, procore_scheduling.log, is created as soon as scheduling is set up. Check it to review scheduled runs.
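It can help to sanity-check a schedule before typing it at the prompt. Below is a minimal Python sketch that handles only `*` or a single integer in each of the five fields (no ranges, lists, or `*/n` steps); `validate_simple_cron` is a hypothetical helper, not part of the tool.

```python
# (field name, lowest allowed value, highest allowed value), in cron order.
CRON_FIELDS = [
    ("minute", 0, 59),
    ("hour", 0, 23),
    ("day-of-month", 1, 31),
    ("month", 1, 12),
    ("day-of-week", 0, 7),  # cron accepts both 0 and 7 for Sunday
]


def validate_simple_cron(expr: str) -> dict:
    """Check a five-field cron expression that uses only '*' or plain integers."""
    parts = expr.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected 5 fields, got {len(parts)}: {expr!r}")
    result = {}
    for value, (name, low, high) in zip(parts, CRON_FIELDS):
        if value == "*":
            result[name] = "*"
            continue
        if not value.isdigit():
            raise ValueError(f"{name}: this sketch only handles '*' or an integer, got {value!r}")
        number = int(value)
        if not low <= number <= high:
            raise ValueError(f"{name}: {number} is outside {low}-{high}")
        result[name] = number
    return result
```

For example, `0 2 * * *` yields minute 0 and hour 2 (daily at 2 AM), while `2 * * * *` yields minute 2 with every hour, which runs the job hourly.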

You can also check the schedule from a terminal.

For Linux and macOS:

To edit or delete the scheduled cron job, run:

```bash

EDITOR=nano crontab -e

```

- After running the command above, you should see a line similar to:

```bash
2 * * * * /Users/your_user/snowflake/venv/bin/python /Users/your_user/snowflake/sql_server_python/connection_config.py 2>&1 | while read line; do echo "$(date) - $line"; done >> /Users/your_user/snowflake/sql_server_python/procore_scheduling.log # procore-data-import
```

- You can also adjust the cron schedule, or delete the whole line to stop the scheduled runs.

For Windows:

- Check that the scheduled task has been created:

```powershell

schtasks /query /tn "ProcoreDeltaShareScheduling" /fo LIST /v

```

- To edit or delete the scheduled task, open Task Scheduler:

- Press Win + R, type taskschd.msc, and press Enter.

- Navigate to the scheduled tasks.

- In the left pane, expand the Task Scheduler Library.

- Look for the folder where your task is saved (e.g., Task Scheduler Library or a custom folder).

- Find your task:

- Look for the task name ProcoreDeltaShareScheduling.

- Click on it to view its details in the bottom pane.

- Verify its schedule:

- Check the Triggers tab to see when the task is set to run.

- Check the History tab to confirm recent runs.

- To delete the task:

- Right-click the task in Task Scheduler and select Delete.

- Immediate Execution

- Option to run delta_share_to_s3.py immediately after configuration.

File Structure

```text
├── requirements.txt        # Dependencies
├── user_exp.py             # Configuration utility
├── delta_share_to_s3.py    # Data sync script
├── config.yaml             # Generated configuration
├── config.share            # Delta Share config file
└── procore_scheduling.log  # Log of scheduling runs
```

Example Usage

- Step 1: Install dependencies

```bash
$ pip install -r requirements.txt
```

- Step 2: Run the configuration utility and answer its prompts, for example:

```text
$ python user_exp.py
Procore Analytics Cloud Connect Access
This CLI will help you choose your source and destination store to access/write Procore data into S3.
Press Enter to Continue.
Enter list of tables (comma-separated), leave it blank for all tables: projects,users,tasks
Enter path to config.share: /path/to/config.share
Enter access key: <s3 access key>
Enter secret: <secret key>
Enter bucket: <bucket name>
Do you want to configure this as a daily job on cron? (Yes/No): Yes
Enter the schedule in cron format (e.g., * * * * * ): 0 2 * * *
Do you want to execute the job now? (Yes/No): Yes
```

- Step 3: Manual execution (if needed)

```bash
$ python delta_share_to_s3.py
```


- Configuration Reuse

The tool saves your configuration in the config.yaml file and offers to reuse previously stored settings:

- Source configuration can be reused.

- Target (S3) configuration can be reused.

- You can choose to update either configuration independently.

Troubleshooting

Common issues and solutions:

- Cron Job Setup

- Ensure system permissions are correctly configured.

- Check system logs if the job fails to run.

- Verify that the script (delta_share_to_s3.py) has execute permissions.

- Configuration File

- Confirm that config.yaml is in the same directory as the script.

- Back up the file before making any changes.

- Support

- Review script logs for detailed error messages.

- Review your config.yaml file for misconfigurations.

- Contact your system administrator for permission-related issues.

- Reach out to Procore support for Delta Share access issues.

- Verify the cron job setup by checking the procore_scheduling.log file.
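Several of the file-level checks above can be automated. This is a small Python sketch, assuming you point it at the directory that holds `delta_share_to_s3.py`; `find_setup_problems` and `tail_log` are hypothetical helper names, and the execute-bit check is skipped on Windows, which does not use those permission bits.

```python
import os
from pathlib import Path


def find_setup_problems(script_dir: str = ".", script: str = "delta_share_to_s3.py") -> list:
    """Check the script and config.yaml locations/permissions; return a list of problems."""
    base = Path(script_dir)
    problems = []
    script_path = base / script
    if not script_path.is_file():
        problems.append(f"missing script: {script_path}")
    elif os.name != "nt" and not os.access(script_path, os.X_OK):
        # Execute bits only matter on Linux/macOS.
        problems.append(f"{script_path} is not executable (for example: chmod +x {script_path})")
    if not (base / "config.yaml").is_file():
        problems.append(f"config.yaml not found in {base}, the script's directory")
    return problems


def tail_log(script_dir: str = ".", lines: int = 5) -> list:
    """Return the last few lines of procore_scheduling.log, or [] if it does not exist yet."""
    log_path = Path(script_dir) / "procore_scheduling.log"
    if not log_path.is_file():
        return []
    return log_path.read_text(errors="replace").splitlines()[-lines:]
```

An empty list from `find_setup_problems` means the files are in place. If scheduled runs still fail, the lines returned by `tail_log` usually show the error message.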

Notes:

- Always back up your configuration before making changes.

- Test new configurations in a non-production environment first.

Build Your Own Connection

Overview

Delta Sharing is an open protocol for secure real-time data sharing, allowing organizations to share data across different computing platforms. This guide will walk you through the process of connecting to and accessing data through Delta Sharing.

Delta Sharing Connector Options

- Python Connector

- Apache Spark Connector

- Set up an Interactive Shell

- Set up a Standalone Project

Python Connector

The Delta Sharing Python Connector is a Python library that implements the Delta Sharing Protocol to read tables from a Delta Sharing server. You can load shared tables as a pandas DataFrame, or as an Apache Spark DataFrame if running in PySpark with the Apache Spark Connector installed.

System Requirements

- Python 3.8+ for delta-sharing version 1.1+

- Python 3.6+ for older versions

- If running Linux, glibc version >= 2.31

- For automatic delta-kernel-rust-sharing-wrapper package installation, see the next section for more details.

Installation Process

```bash
pip3 install delta-sharing
```

- If you are using Databricks Runtime, you can follow the Databricks Libraries documentation to install the library on your clusters.

- If this doesn’t work because of an issue downloading delta-kernel-rust-sharing-wrapper, try the following:

- Check that your python3 version is 3.8 or later.

- Upgrade your pip3 to the latest version
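You can check the version requirements listed above from Python itself before installing. This is a minimal sketch that uses only the standard library's `sys` and `platform` modules; `check_delta_sharing_requirements` is a hypothetical helper, and the glibc check only applies on Linux systems that report glibc.

```python
import platform
import sys


def version_tuple(text: str) -> tuple:
    """'2.31' -> (2, 31); non-numeric parts are ignored."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def check_delta_sharing_requirements() -> list:
    """Return a list of problems found against the system requirements above."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append(f"Python {platform.python_version()} found; delta-sharing 1.1+ needs 3.8+")
    if sys.platform.startswith("linux"):
        libc, version = platform.libc_ver()  # e.g. ('glibc', '2.35'); ('', '') if unknown
        if libc == "glibc" and version_tuple(version) < (2, 31):
            problems.append(f"glibc {version} found; 2.31 or newer is required")
    return problems
```

If the list is empty and installation still fails, upgrading pip (`python3 -m pip install --upgrade pip`) is the remaining step suggested above.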

Accessing Shared Data

The connector accesses shared tables based on profile files, which are JSON files containing a user's credentials to access a Delta Sharing server. We have several ways to get started:

Before You Begin

- Download a profile file from your data provider.

Accessing Shared Data Options

After you save the profile file, you can use it in the connector to access shared tables.

```python
import delta_sharing

# Point to the profile file. It can be a file on the local file system or a file on a remote storage.
profile_file = "<profile-file-path>"

# Create a SharingClient.
client = delta_sharing.SharingClient(profile_file)

# List all shared tables.
client.list_all_tables()

# Create a url to access a shared table.
# A table path is the profile file path followed by `#` and the fully qualified name of a table
# (`<share-name>.<schema-name>.<table-name>`).
table_url = profile_file + "#<share-name>.<schema-name>.<table-name>"

# Fetch 10 rows from a table and convert it to a Pandas DataFrame. This can be used to read
# sample data from a table that cannot fit in the memory.
delta_sharing.load_as_pandas(table_url, limit=10)

# Load a table as a Pandas DataFrame. This can be used to process tables that can fit in the memory.
delta_sharing.load_as_pandas(table_url)

# Load a table as a Pandas DataFrame explicitly using Delta Format.
delta_sharing.load_as_pandas(table_url, use_delta_format=True)

# If the code is running with PySpark, you can use `load_as_spark` to load the table as a Spark DataFrame.
delta_sharing.load_as_spark(table_url)

# If the table supports history sharing (tableConfig.cdfEnabled=true in the OSS Delta Sharing Server),
# the connector can query table changes.

# Load table changes from version 0 to version 5, as a Pandas DataFrame.
delta_sharing.load_table_changes_as_pandas(table_url, starting_version=0, ending_version=5)

# If the code is running with PySpark, you can load table changes as a Spark DataFrame.
delta_sharing.load_table_changes_as_spark(table_url, starting_version=0, ending_version=5)
```
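Building on the calls above, here is a small sketch that previews several shared tables at once. It relies on `SharingClient.list_all_tables()` returning table entries with `share`, `schema`, and `name` attributes. `table_url` and `preview_tables` are illustrative helper names, and the optional `wanted` filter mirrors the comma-separated table lists used by the Procore CLI tools rather than any delta-sharing feature.

```python
def table_url(profile_file: str, share: str, schema: str, name: str) -> str:
    """Build '<profile-file-path>#<share-name>.<schema-name>.<table-name>'."""
    return f"{profile_file}#{share}.{schema}.{name}"


def preview_tables(profile_file: str, wanted=None, rows: int = 10) -> dict:
    """Load a small sample of each shared table, optionally limited to names in `wanted`."""
    import delta_sharing  # imported lazily so table_url() works without the package

    client = delta_sharing.SharingClient(profile_file)
    samples = {}
    for table in client.list_all_tables():  # each entry has .share, .schema and .name
        if wanted and table.name not in wanted:
            continue
        url = table_url(profile_file, table.share, table.schema, table.name)
        samples[table.name] = delta_sharing.load_as_pandas(url, limit=rows)
    return samples
```

For example, `preview_tables("config.share", wanted={"projects", "users"})` returns a dictionary of 10-row DataFrames keyed by table name, which is a cheap way to confirm access before loading full tables.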

Apache Spark Connector

The Apache Spark Connector implements the Delta Sharing Protocol to read shared tables from a Delta Sharing Server. It can be used in SQL, Python, Java, Scala and R.

System Requirements

- Java 8+

- Scala 2.12.x