Export to Fabric Lakehouse Using Data Factory

Overview

Integrating Delta Sharing with Microsoft Fabric Data Factory lets you access and process shared Delta tables in your Analytics 2.0 workflows. Delta Sharing is an open protocol for secure data sharing that lets organizations share data without duplicating it.

Prerequisites

- Analytics 2.0 SKU

- Delta Sharing credentials:

  - Obtain the Delta Sharing credentials (profile) file, such as config.share or share.json, from your data provider.

  - This file should include:

    - Endpoint URL: the Delta Sharing server URL.

    - Bearer Token: the token used for secure data access.

- Microsoft Fabric setup:

  - A Microsoft Fabric tenant account with an active subscription.

  - Access to a Microsoft Fabric-enabled workspace.
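The credentials file is a small JSON document defined by the Delta Sharing protocol, and its endpoint and bearerToken fields are the two values the Fabric connector asks for. The following standard-library Python sketch parses such a file and checks that both values are present. The helper names (parse_share_profile, read_share_profile, ShareProfile) are hypothetical; only the field names shareCredentialsVersion, endpoint, bearerToken, and expirationTime come from the Delta Sharing profile file format.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShareProfile:
    """Values read from a Delta Sharing profile (credentials) file."""
    endpoint: str
    bearer_token: str
    expiration_time: Optional[str] = None


def parse_share_profile(text: str) -> ShareProfile:
    """Parse the JSON contents of a Delta Sharing profile file.

    Hypothetical helper: field names follow the Delta Sharing profile
    file format; the rest is illustrative.
    """
    data = json.loads(text)
    missing = [key for key in ("endpoint", "bearerToken") if key not in data]
    if missing:
        raise ValueError(f"profile file is missing: {', '.join(missing)}")
    return ShareProfile(
        # Drop a trailing slash so the URL can be joined with REST paths later.
        endpoint=data["endpoint"].rstrip("/"),
        bearer_token=data["bearerToken"],
        # Optional field; None when the provider did not set an expiry.
        expiration_time=data.get("expirationTime"),
    )


def read_share_profile(path: str) -> ShareProfile:
    """Read and parse a profile file such as config.share from disk."""
    with open(path, encoding="utf-8") as f:
        return parse_share_profile(f.read())
```

For example, read_share_profile("config.share").endpoint gives the value for the URL field, and .bearer_token gives the value for the Bearer Token field. Treat the token as a secret and avoid printing or logging it.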

Steps

- Switch to the Data Factory Experience

- Configure the Dataflow

- Perform Data Transformations

- Validation and Monitoring

Switch to the Data Factory Experience

- Navigate to your Microsoft Fabric workspace.

- Select New, then choose Dataflow Gen2.

Configure the Dataflow

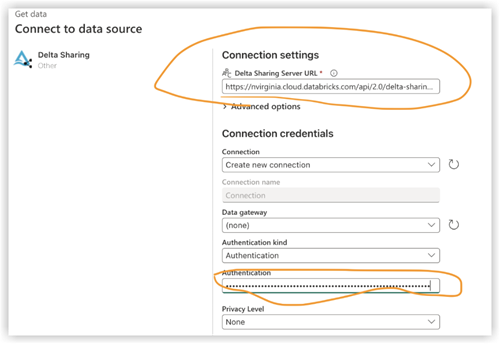

- Go to the dataflow editor.

- Click Get Data and select More.

- Under New source, select Delta Sharing (listed under Other) as the data source.

- Enter the following details:

  - URL: the endpoint value from your Delta Sharing credentials file.

  - Bearer Token: the bearerToken value from the same file.

- Click Next and select the desired tables.

- Click Create to complete the setup.
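Before pasting the URL and token into the Get Data dialog, you may want to confirm that the credentials are accepted. The Delta Sharing protocol defines a List Shares call (GET on {endpoint}/shares with an Authorization: Bearer header), so a request like the one below is a quick sanity check. This is a minimal standard-library Python sketch: the function names are hypothetical, and only the first page of results is read (any nextPageToken in the response is ignored).

```python
import json
import urllib.request
from typing import List


def build_list_shares_request(endpoint: str, bearer_token: str) -> urllib.request.Request:
    """Build a Delta Sharing 'List Shares' request: GET {endpoint}/shares."""
    url = endpoint.rstrip("/") + "/shares"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {bearer_token}"},
        method="GET",
    )


def share_names(response_body: str) -> List[str]:
    """Extract share names from a List Shares JSON response (first page only)."""
    payload = json.loads(response_body)
    return [item["name"] for item in payload.get("items", [])]


def check_credentials(endpoint: str, bearer_token: str, timeout: float = 10.0) -> List[str]:
    """Send the request; a rejected token raises urllib.error.HTTPError (e.g. 401)."""
    request = build_list_shares_request(endpoint, bearer_token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return share_names(response.read().decode("utf-8"))
```

If check_credentials returns the share names you expect, the same URL and token should work in the dataflow's connection dialog.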

Perform Data Transformations

After configuring the dataflow, you can apply transformations to the shared Delta data in the dataflow editor. To load the data into a Lakehouse, use one of the following options:

- Add Data Destination

- Create/Open Lakehouse

Add Data Destination

- In the Data Factory dataflow editor, select the query you want to load.

- Click Add Data Destination.

- Select Lakehouse as the target and click Next.

- Choose the target Lakehouse and table, then click Next to confirm.

Create/Open Lakehouse

- Create or open your Lakehouse and click Get data.

- Select New Dataflow Gen2.

- Click Get Data, then More, and find Delta Sharing.

- Enter the URL and bearer token from your credentials file, then select Next.

- Choose the tables to load and click Next.

- After you publish the dataflow and it finishes refreshing, the selected data should appear in your Fabric Lakehouse.
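When choosing tables in the steps above, it helps to know exactly which tables the share exposes. The Delta Sharing protocol provides a List All Tables in a Share call (GET on {endpoint}/shares/{share}/all-tables) whose response items carry share, schema, and name fields. The standard-library Python sketch below turns that response into share.schema.table names, the form Delta Sharing connectors use to address a table. Function names are hypothetical, pagination is ignored, and the share name must come from your data provider.

```python
import json
import urllib.parse
import urllib.request
from typing import List


def all_tables_url(endpoint: str, share: str) -> str:
    """URL for 'List All Tables in a Share'; the share name is percent-encoded."""
    return f"{endpoint.rstrip('/')}/shares/{urllib.parse.quote(share, safe='')}/all-tables"


def qualified_table_names(response_body: str) -> List[str]:
    """Turn a List All Tables response into share.schema.table names (first page only)."""
    payload = json.loads(response_body)
    return [
        f"{item['share']}.{item['schema']}.{item['name']}"
        for item in payload.get("items", [])
    ]


def list_all_tables(endpoint: str, bearer_token: str, share: str, timeout: float = 10.0) -> List[str]:
    """Call the endpoint with the bearer token and return qualified table names."""
    request = urllib.request.Request(
        all_tables_url(endpoint, share),
        headers={"Authorization": f"Bearer {bearer_token}"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return qualified_table_names(response.read().decode("utf-8"))
```

The resulting list is also a convenient checklist for confirming that every table you selected arrived in the Lakehouse.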

Validation and Monitoring

Test your dataflows and pipelines to confirm that they run successfully. Use the monitoring tools in Data Factory, such as the Monitoring hub, to track the progress and logs of each activity.
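One simple way to validate a load is to compare per-table row counts between the source share and the Lakehouse. The helper below is a hypothetical standard-library Python sketch. It assumes you have already collected the counts by whatever means suits your environment, such as a query in a Fabric notebook. It only makes sense when your transformations do not filter or aggregate rows.

```python
from typing import Dict, List


def reconcile_row_counts(
    source_counts: Dict[str, int], lakehouse_counts: Dict[str, int]
) -> List[str]:
    """Compare row counts per table; an empty result means every table matches.

    Tables present only in the Lakehouse are not reported.
    """
    problems = []
    for table, expected in sorted(source_counts.items()):
        actual = lakehouse_counts.get(table)
        if actual is None:
            problems.append(f"{table}: missing from Lakehouse")
        elif actual != expected:
            problems.append(f"{table}: expected {expected} rows, found {actual}")
    return problems
```

Checking counts this way catches missing or partially loaded tables. Use the Data Factory run logs to find out why a table did not load.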